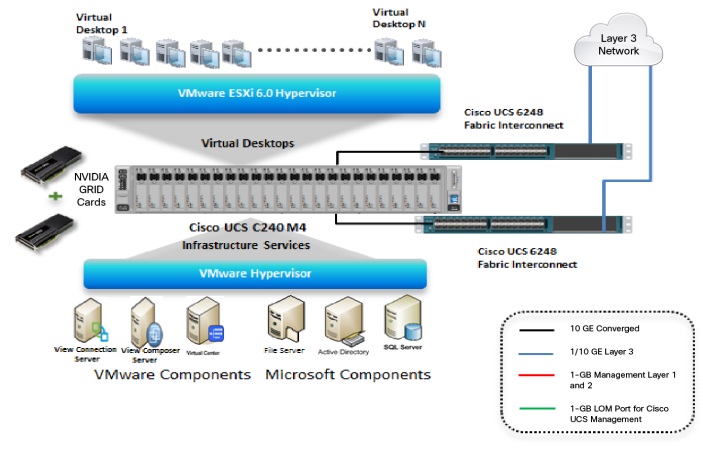

Now there is a way for users with demanding graphics processing needs to take advantage of the power and performance of virtualization. To address the requirements of these power users, Cisco is now including the NVIDIA GRID K1 and K2 cards in the Cisco Unified Computing System (Cisco UCS) portfolio of PCI Express (PCIe) cards. These cards deliver high performance when used with Cisco UCS C-Series Rack Servers.

The Cisco UCS C240 M4 Rack Server is the newest addition to the C-Series. Combined with the full-length, full-power NVIDIA GRID cards, it delivers high performance for graphics-intensive applications. Previously, departments with high graphics processing requirements, such as engineering, design, imaging, and marketing, found it challenging to move large files easily. Now these users can centralize their graphics workloads and files in the data center and shift work between geographic locations more easily.

With the PCIe graphics cards in the Cisco UCS C-Series, users can take advantage of:

Figure 1: The C240 M4 NVIDIA GRID K1/K2 Card Architectural Design

High-performance virtualization for graphics-intensive applications

Here are a few of the capabilities of the new Cisco UCS C240 M4 server that make this platform so appealing to graphics-intensive users:

- This server is the newest 2-socket, 2U rack server from Cisco, designed for both performance and expandability over a wide range of storage-intensive infrastructure workloads from big data to collaboration.

- It offers up to two Intel Xeon processor E5-2600 or E5-2600 v2 CPUs, 24 DIMM slots, 24 disk drives, and four 1 Gigabit Ethernet LAN-on-motherboard (LOM) ports.

- With the NVIDIA GRID cards, this server uses rack space more efficiently than the 2-slot, 2.5-inch equivalent rack unit.

- The Cisco UCS C240 M4 server can be used standalone, or as part of Cisco UCS solutions, which unify computing, networking, management, virtualization, and storage access into a single integrated architecture. This enables end-to-end server visibility, management, and control in both bare metal and virtualized environments.

In addition, the Cisco UCS C240 M4 server includes a modular LAN on motherboard (mLOM) slot for installation of a Cisco Virtual Interface Card (VIC) or third-party network interface card (NIC). New to Cisco rack servers, the mLOM slot can be used to install a VIC without consuming a PCIe slot, providing greater I/O expandability. The Cisco VIC 1227 incorporates next-generation converged network adapter (CNA) technology from Cisco, providing investment protection for future feature releases. It can present up to 256 PCIe interfaces to the host, each configurable as either a NIC or an HBA. The Cisco VIC 1227 also supports VM-FEX.

When configuring these options on the Cisco UCS C240 M4, keep these NVIDIA GPU card configuration rules in mind:

- You can mix GRID K1 and K2 GPU cards in the same server.

- Do not mix GRID GPU cards with Tesla GPU cards in the same server.

- Do not mix different models of Tesla GPU cards in the same server.

- All GPU cards require two CPUs and at least two 1400W power supplies in the server.

- To install a single NVIDIA GRID K1 or K2 card, use PCIe slot 2. To install two NVIDIA GRID K1 or K2 cards, use PCIe slots 2 and 5.
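The rules above lend themselves to a simple pre-installation check. The following is a minimal sketch in Python, not a Cisco tool: the `ServerConfig` type, its field names, and the `check_gpu_rules` function are hypothetical names introduced here for illustration, and the model strings are assumed to be spelled as shown.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical model names; only the GRID K1/K2 pairing comes from the rules above.
GRID_MODELS = {"GRID K1", "GRID K2"}
# Slot placement from the rules above: one card -> slot 2, two cards -> slots 2 and 5.
GRID_SLOTS_BY_COUNT = {1: {2}, 2: {2, 5}}


@dataclass
class ServerConfig:
    gpu_models: List[str]  # model of each installed GPU card
    gpu_slots: List[int]   # PCIe slot of each card, in the same order
    cpu_count: int         # number of installed CPUs
    psu_watts: List[int]   # rated wattage of each installed power supply


def check_gpu_rules(cfg: ServerConfig) -> List[str]:
    """Return a list of rule violations; an empty list means the config passes."""
    problems: List[str] = []
    if not cfg.gpu_models:
        return problems  # no GPU cards, so none of the rules apply

    grid = [m for m in cfg.gpu_models if m in GRID_MODELS]
    tesla = [m for m in cfg.gpu_models if m.startswith("Tesla")]

    if grid and tesla:
        problems.append("GRID and Tesla cards are mixed in the same server")
    if len(set(tesla)) > 1:
        problems.append("different Tesla models are mixed in the same server")
    if cfg.cpu_count < 2:
        problems.append("GPU cards require two CPUs")
    if sum(1 for watts in cfg.psu_watts if watts >= 1400) < 2:
        problems.append("GPU cards require at least two 1400W power supplies")

    # Slot placement is only stated for one or two GRID K1/K2 cards.
    expected = GRID_SLOTS_BY_COUNT.get(len(grid))
    if grid and not tesla and expected is not None:
        if set(cfg.gpu_slots) != expected:
            problems.append(f"GRID cards should occupy PCIe slots {sorted(expected)}")
    return problems


if __name__ == "__main__":
    cfg = ServerConfig(["GRID K1", "GRID K2"], [2, 5], 2, [1400, 1400])
    print(check_gpu_rules(cfg) or "configuration passes")
```

Returning a list of messages rather than a single pass/fail flag lets an installer see every violated rule at once.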

Support for all deployment methods

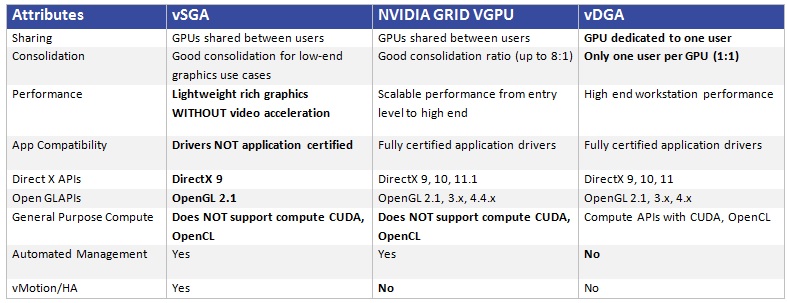

With the high processing capacity of the Cisco UCS C240 M4, all three deployment methods from NVIDIA are supported.

Deployment capabilities

Here are a few of the capability highlights of each deployment method, including the DirectX API and OpenGL requirements.

Table 1: GPU Deployment and Supported Applications

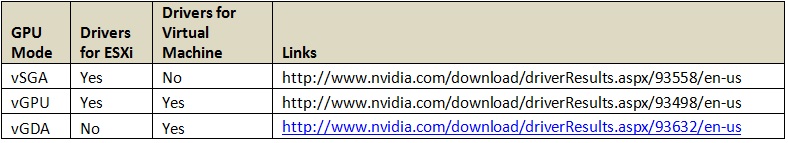

You can download the required drivers from the NVIDIA website using the links provided here.

Table 2: Driver Links

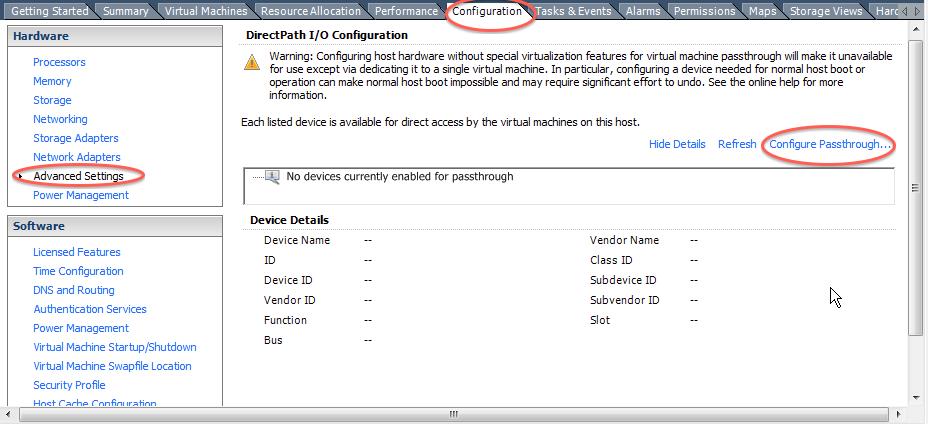

vDGA Configuration

Here is an example of how to configure the vDGA, or pass-through, GPU deployment on an ESXi server. Select the VMware ESXi host, choose Configuration, select the Hardware tab, and then choose Advanced Settings > Configure Pass-through.

Figure 3: vDGA Configuration

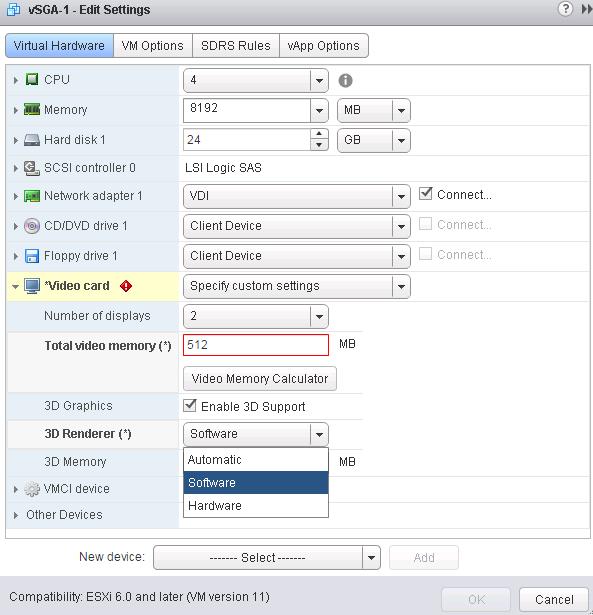
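After pass-through is configured as described above, it can be useful to confirm which NVIDIA devices the host reports as enabled. The sketch below is one way to express that check in Python. It is an illustration rather than a supported procedure: the function name `nvidia_passthrough_status` is hypothetical, and the attribute names (`id`, `vendorId`, `deviceName`, `passthruEnabled`) are assumed to match the PCI device and pass-through objects exposed by the vSphere API. The example uses plain stand-in objects and made-up PCI addresses instead of a live host connection.

```python
from types import SimpleNamespace

NVIDIA_VENDOR_ID = 0x10DE  # PCI vendor ID assigned to NVIDIA


def nvidia_passthrough_status(pci_devices, passthru_info):
    """Map each NVIDIA PCI device ID to (device name, pass-through enabled?).

    Both arguments are iterables of objects; the attribute names used here
    are assumptions modeled on the vSphere API, not verified against a host.
    """
    enabled = {entry.id: bool(entry.passthruEnabled) for entry in passthru_info}
    return {
        dev.id: (dev.deviceName, enabled.get(dev.id, False))
        for dev in pci_devices
        if dev.vendorId == NVIDIA_VENDOR_ID
    }


# Stand-in data for illustration only; the PCI addresses are invented.
devices = [
    SimpleNamespace(id="0000:85:00.0", vendorId=0x10DE, deviceName="GRID K2"),
    SimpleNamespace(id="0000:86:00.0", vendorId=0x10DE, deviceName="GRID K2"),
    SimpleNamespace(id="0000:01:00.0", vendorId=0x8086, deviceName="Ethernet"),
]
info = [
    SimpleNamespace(id="0000:85:00.0", passthruEnabled=True),
    SimpleNamespace(id="0000:86:00.0", passthruEnabled=False),
]

for pci_id, (name, is_enabled) in nvidia_passthrough_status(devices, info).items():
    print(pci_id, name, "enabled" if is_enabled else "not enabled")
```

Keeping the function independent of any connection library means the same logic can be exercised offline with stand-in objects before it is pointed at real host data.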

vSGA Configuration

The second deployment method is vSGA, or virtual shared graphics acceleration. To place a virtual machine in vSGA mode, edit its properties and select “Software” for graphics sharing.

Figure 4: Editing Virtual Machine Properties for vSGA

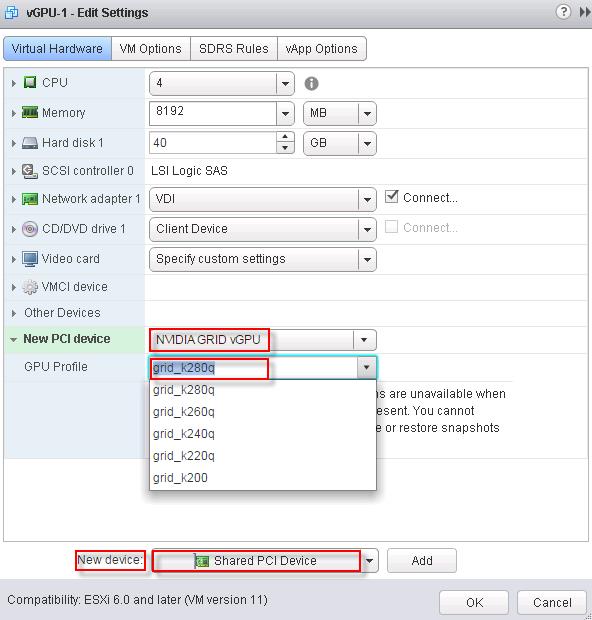

vGPU Configuration

The NVIDIA GRID vGPU deployment, which is NVIDIA’s newest release, allows multiple virtual desktops to share a single physical GPU, and multiple GPUs to reside on a single physical PCI card. It provides the same 100% application compatibility as vDGA pass-through graphics, but at a lower cost, because multiple desktops can share a single graphics card via PCI shared mode.

In this configuration, you need drivers for both the ESXi host and the virtual machine. The download package (see Table 2) includes files for each driver.

You can assign a shared PCIe device, such as a K2 card installed in the Cisco UCS C240 M4 server, to different users. For more information on vGPU user profiles, visit: http://www.nvidia.com/object/virtual-gpus.html.

Figure 5: vGPU Profiles for the Virtual Machine

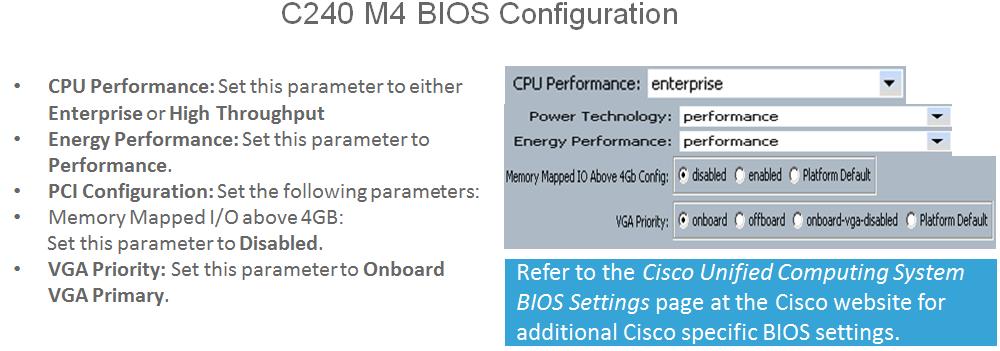
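The cost advantage of vGPU over vDGA described above comes down to simple multiplication: the number of desktops a server can host is the number of cards, times the GPUs per card, times the desktops that share each GPU. The sketch below works through that arithmetic in Python. The function name `max_desktops` is hypothetical, and the example values (two GPUs per card, four desktops per GPU) are illustrative assumptions, not figures from this document. Check the NVIDIA profile documentation for the limits of a specific card and profile.

```python
def max_desktops(cards: int, gpus_per_card: int, desktops_per_gpu: int) -> int:
    """Upper bound on GPU-backed desktops for one server.

    All three inputs are supplied by the caller; nothing here is looked up
    from NVIDIA or Cisco data.
    """
    for name, value in (
        ("cards", cards),
        ("gpus_per_card", gpus_per_card),
        ("desktops_per_gpu", desktops_per_gpu),
    ):
        if value < 0:
            raise ValueError(f"{name} must not be negative")
    return cards * gpus_per_card * desktops_per_gpu


# Illustrative assumption: two cards with two physical GPUs each.
# vDGA dedicates a whole GPU to one desktop, so desktops_per_gpu is 1.
vdga_desktops = max_desktops(cards=2, gpus_per_card=2, desktops_per_gpu=1)

# vGPU lets several desktops share a GPU; 4 per GPU is a hypothetical profile limit.
vgpu_desktops = max_desktops(cards=2, gpus_per_card=2, desktops_per_gpu=4)

print(f"vDGA: {vdga_desktops} desktops, vGPU: {vgpu_desktops} desktops")
```

Under these assumed values, the same two cards serve 4 desktops with vDGA and 16 with vGPU, which is the sense in which sharing lowers the cost per desktop.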

Here are the recommended Cisco C240 M4 BIOS settings for the GPU deployment.

Figure 6: C240 M4 BIOS settings

For more information

Together, Cisco, NVIDIA, and VMware provide a high-performance platform for virtualizing graphics-intensive applications.

To learn more, you can review the full reference architecture here: http://www.cisco.com/c/en/us/products/collateral/servers-unified-computing/ucs-c-series-rack-servers/whitepaper-c11-735450.html