Generative AI, Retrieval Augmented Generation (RAG), and LangChain

Recently, I've received numerous inquiries about Retrieval-Augmented Generation (RAG): what it entails and the advantages it offers. I have also been using and demonstrating LangChain across a broad spectrum of generative AI use cases. Many folks have asked me for a high-level overview and for guidance on getting started with these technologies and tools. In this article, I aim to explain these concepts.

What is RAG?

RAG is an AI framework that improves the quality of responses produced by Large Language Models (LLMs) by connecting the model to external knowledge bases, such as a document collection, which supplement the knowledge captured in the model's training data. Incorporating RAG in a question-answering system powered by an LLM (e.g., GPT, LLaMA2, Falcon, etc.) provides two significant benefits: it gives the model access to current, credible information, and it gives users access to the model's sources, so they can validate its assertions and place more trust in the AI implementation and its results.

Tip: This is a great video by IBM introducing RAG.

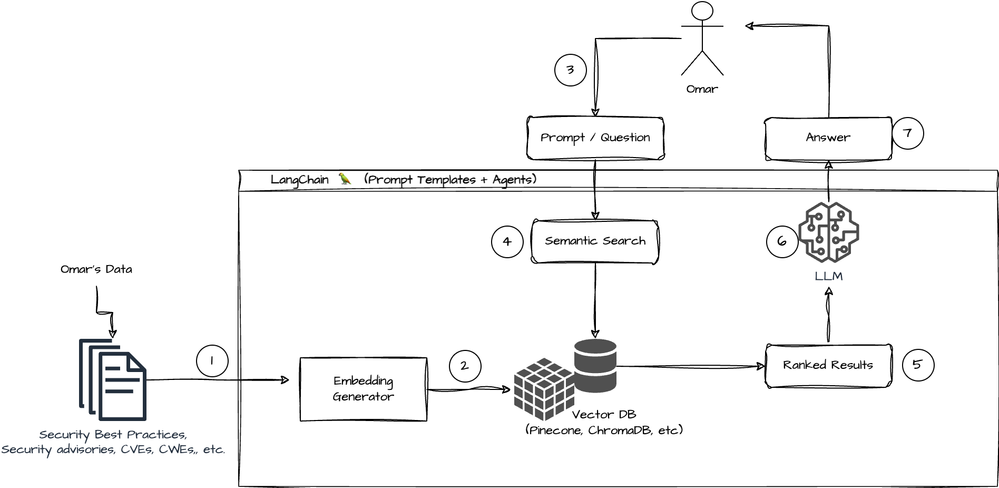

The following is a high-level illustration of how RAG works.

- Documents are converted into a vector representation, often referred to as an embedding. This can be done using different techniques like TF-IDF (Term Frequency-Inverse Document Frequency), Word2Vec, FastText, or more advanced methods like BERT (Bidirectional Encoder Representations from Transformers) or other transformer-based models. Security data is used in this example.

- Embeddings (vectorized documents) are stored in a vector database such as Chroma DB or Pinecone. Again, embeddings are numerical representations of the text data, enabling their storage and retrieval in a way that's optimized for performance.

- The user asks a question.

- The question is used to retrieve relevant documents from the vector database. LangChain supports various retrieval algorithms, including basic semantic search, parent document retriever, self-query retriever, ensemble retriever, and more.

- When conducting a search, the retrieval system assigns each document a score based on its relevance to the query and sorts the documents in descending order, so the most relevant ones appear at the top of the list. In basic semantic search, that score is the similarity between the query embedding and each document embedding. Depending on the retriever, the ranking may also weigh other relevance signals such as keyword matching, document popularity, or user behavior.

- Results are sent to the LLM (e.g., GPT, Falcon, LLaMA2, etc.).

- Using the retrieved documents as context, the model then generates a response.
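The steps above can be sketched end to end with nothing but the Python standard library. This is a toy illustration under stated assumptions, not how a production system works: the "embedding" is a simple bag-of-words count vector, the "vector database" is an in-memory list, the three documents are made up, and the final call to an LLM is left out (the sketch stops at the prompt that would be sent to the model).

```python
import math
import re
from collections import Counter
from typing import List, Tuple

# Made-up corpus standing in for the security documents in the illustration.
DOCUMENTS = [
    "CVE records describe publicly disclosed software vulnerabilities.",
    "A firewall filters network traffic based on configured rules.",
    "Phishing emails trick users into revealing their credentials.",
]


def embed(text: str) -> Counter:
    """Toy embedding: a sparse term-count vector (real systems use a model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse vectors."""
    dot = sum(a[term] * b[term] for term in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


# Embed the documents once and keep them in memory (stand-in for a vector DB).
INDEX = [(doc, embed(doc)) for doc in DOCUMENTS]


def retrieve(question: str, k: int = 2) -> List[Tuple[float, str]]:
    """Score every document against the question and return the top k."""
    query_vector = embed(question)
    scored = [(cosine(query_vector, vector), doc) for doc, vector in INDEX]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:k]


def build_prompt(question: str, k: int = 2) -> str:
    """Combine the retrieved documents and the question into one prompt."""
    context = "\n".join(doc for _, doc in retrieve(question, k))
    return (
        "Answer the question based only on the following context:\n"
        f"{context}\n\nQuestion: {question}\n"
    )


if __name__ == "__main__":
    print(build_prompt("How do phishing emails trick users?"))
```

Swapping `embed` for a real embedding model and `INDEX` for a vector database keeps the same overall shape: embed, store, retrieve, rank, then prompt.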

But, what is LangChain?

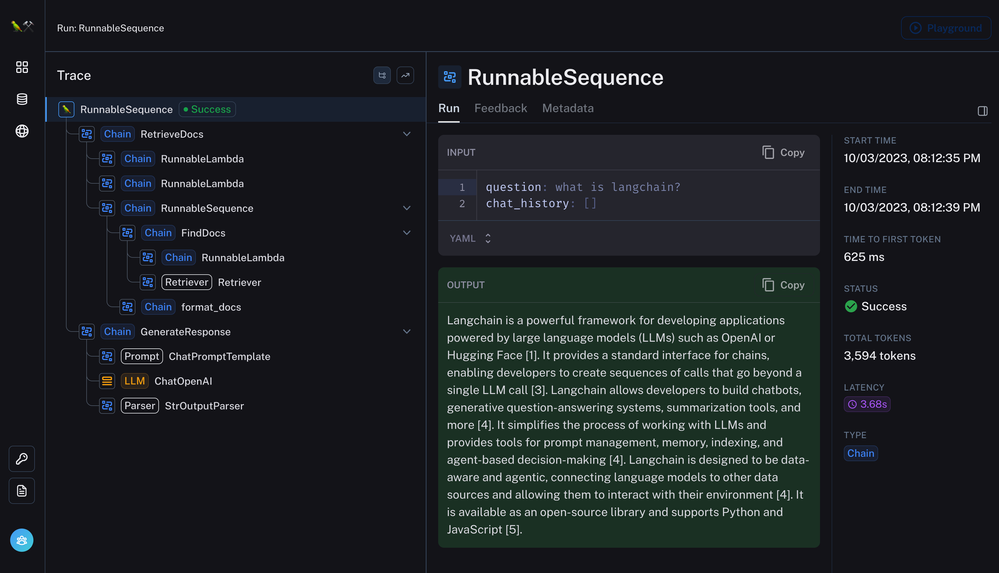

Well, let's "ask LangChain" via https://chat.langchain.com

Output: Langchain is a powerful framework for developing applications powered by large language models (LLMs) such as OpenAI or Hugging Face [1]. It provides a standard interface for chains, enabling developers to create sequences of calls that go beyond a single LLM call [3]. Langchain allows developers to build chatbots, generative question-answering systems, summarization tools, and more [4]. It simplifies the process of working with LLMs and provides tools for prompt management, memory, indexing, and agent-based decision-making [4]. Langchain is designed to be data-aware and agentic, connecting language models to other data sources and allowing them to interact with their environment [4]. It is available as an open-source library and supports Python and JavaScript [5].

The LangChain Chat application allows you to interact with the LangChain documentation and retrieve answers. It is a great way to learn about the LangChain framework, and a glimpse of the "future of learning".

LangChain's Python Documentation

LangChain's Python documentation can be accessed at: https://python.langchain.com. It lets you explore different examples and use cases, and learn about the supported AI providers such as OpenAI, Azure, Google, Anthropic, and others. The LangChain Python API is also very well documented.

Tip: I recently published an article on my personal blog that shows how to use LangChain, with a few code examples.

LangChain Agents

In LangChain, agents are problem-solving entities that use LLM capabilities to perform a wide range of tasks. Agents combine the decision-making ability of LLMs with tools to execute and implement solutions on behalf of users. They can break down tasks into sub-goals, retain and recall information from memory, and access external sources of information through tools like APIs. Agents in LangChain can self-correct, handle multi-hop tasks, and tackle long-term memory tasks. There are different types of agents, including action agents, simulation agents, and autonomous agents, each designed for specific purposes and execution styles.
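To make the idea of an agent loop concrete, here is a minimal, library-free sketch. Everything in it is hypothetical and for illustration only: `fake_llm` is a hard-coded stand-in for a real model call, and `lookup_port` and `add` are toy tools. It is not LangChain's agent API. It only shows the pattern of deciding on an action, calling a tool, recording the observation in memory, and repeating until the task is done.

```python
from typing import Callable, Dict, List, Tuple

# One memory entry per step: (action, input, observation).
Step = Tuple[str, str, str]


def lookup_port(service: str) -> str:
    """Toy tool: look up a well-known port (stand-in for an external API)."""
    ports = {"ssh": "22", "https": "443"}
    return ports.get(service.lower(), "unknown")


def add(expression: str) -> str:
    """Toy tool: add integers written as 'a+b+c'."""
    return str(sum(int(part) for part in expression.split("+")))


TOOLS: Dict[str, Callable[[str], str]] = {"lookup_port": lookup_port, "add": add}


def fake_llm(task: str, memory: List[Step]) -> Tuple[str, str]:
    """Hard-coded stand-in for an LLM deciding the next action.

    A real agent would send the task and the memory to a model and parse
    its reply; here the plan is scripted so the example runs offline.
    """
    if len(memory) == 0:
        return ("lookup_port", "ssh")
    if len(memory) == 1:
        return ("lookup_port", "https")
    if len(memory) == 2:
        return ("add", f"{memory[0][2]}+{memory[1][2]}")
    return ("finish", memory[-1][2])


def run_agent(task: str, max_steps: int = 5) -> str:
    """Decide, act, observe, remember; stop on 'finish' or the step limit."""
    memory: List[Step] = []
    for _ in range(max_steps):
        action, tool_input = fake_llm(task, memory)
        if action == "finish":
            return tool_input
        observation = TOOLS[action](tool_input)
        memory.append((action, tool_input, observation))
    return "stopped: step limit reached"


if __name__ == "__main__":
    print(run_agent("What is the sum of the SSH and HTTPS port numbers?"))
```

The step limit is a design choice worth keeping in any real agent, since a model that never decides to finish would otherwise loop forever.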

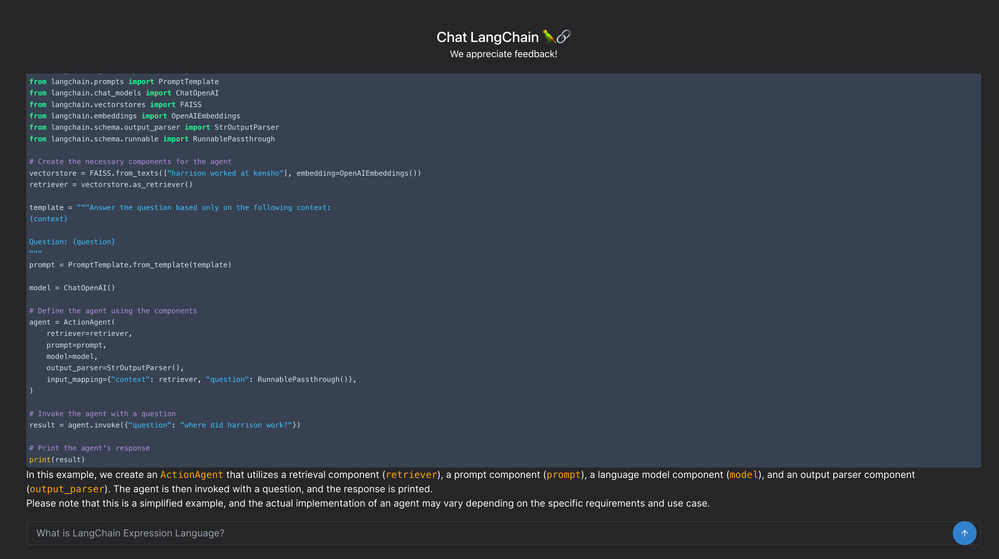

The LangChain chat can also generate sample code and walk you through the concept of agents in code.

What are Prompt Templates?

Prompt templates in LangChain are pre-defined recipes or structures for generating prompts for language models. They provide a flexible and reusable way to construct prompts by combining instructions, examples, and specific context or questions appropriate for a given task.

Prompt templates can be created using the PromptTemplate class. They support variables that can be filled in with specific values using the format() method. This allows for dynamic and customizable prompts based on the specific inputs provided.
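The idea of a template with variables that are filled in at call time can be sketched without any dependencies. The `SimplePromptTemplate` class below is a hypothetical stand-in written for this illustration. It is not LangChain's `PromptTemplate`, although it mirrors the same pattern of a template string plus a `format()` method.

```python
from dataclasses import dataclass
from string import Formatter
from typing import List


@dataclass(frozen=True)
class SimplePromptTemplate:
    """Hypothetical minimal prompt template built on str.format fields."""

    template: str

    @property
    def input_variables(self) -> List[str]:
        """Names of the {placeholders} found in the template, sorted."""
        fields = Formatter().parse(self.template)
        return sorted({name for _, name, _, _ in fields if name})

    def format(self, **values: str) -> str:
        """Fill in every placeholder; fail loudly if one is missing."""
        missing = [name for name in self.input_variables if name not in values]
        if missing:
            raise KeyError(f"missing template variables: {missing}")
        return self.template.format(**values)


if __name__ == "__main__":
    summary_prompt = SimplePromptTemplate(
        "Summarize the following {doc_type} for a {audience}:\n{text}"
    )
    print(summary_prompt.input_variables)
    print(
        summary_prompt.format(
            doc_type="security advisory",
            audience="network administrator",
            text="(advisory text goes here)",
        )
    )
```

Because the template is just data, the same object can be reused with different inputs or handed to different models.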

Prompt templates can be used for various purposes, such as generating question-answer pairs, completing sentences, or engaging in conversations with language models. They provide a convenient way to guide the language model's response and ensure relevant and coherent output.

The LangChain community aims to create model-agnostic prompt templates, making it easy to reuse templates across different AI language models and tasks.

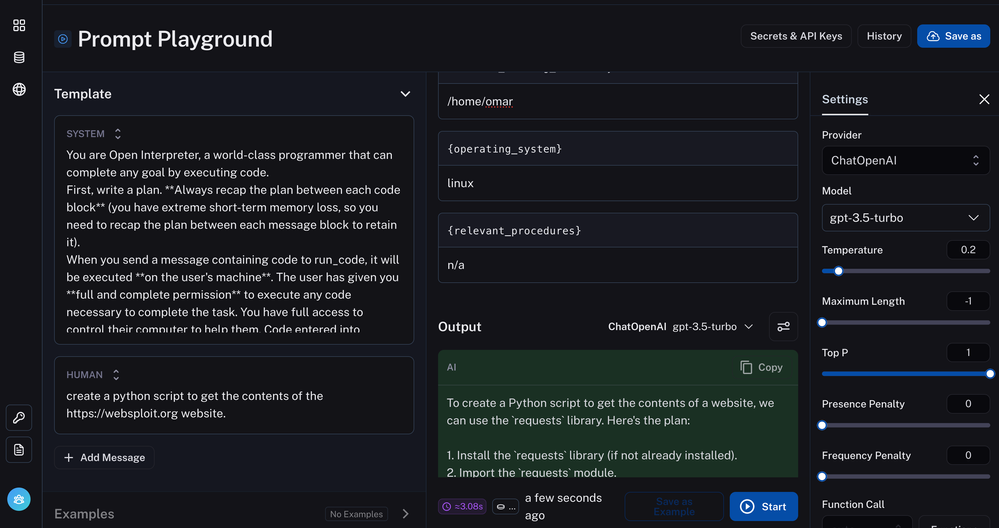

LangChain Template Hub

The LangChain Hub is a platform for exploring, distributing, and version-controlling prompts for LangChain and LLMs broadly. It's an excellent resource for seeking inspiration for your personal prompts, or for showcasing your unique prompts to the AI community.

The Prompt Playground allows you to create, edit, and test your own prompts or prompts shared by others in the community.

The following is a high-level code example of using LangChain for RAG:

```python
# Import paths follow the LangChain releases available when this article was
# written; newer releases move most of these into the langchain_community
# and langchain_openai packages.
from langchain.document_loaders import WebBaseLoader
from langchain.text_splitter import RecursiveCharacterTextSplitter
from langchain.embeddings import OpenAIEmbeddings
from langchain.vectorstores import FAISS
from langchain.prompts import ChatPromptTemplate
from langchain.chat_models import ChatOpenAI
from langchain.schema.output_parser import StrOutputParser
from langchain.schema.runnable import RunnablePassthrough

# Step 1: Load documents
loader = WebBaseLoader("https://example.com")
documents = loader.load()

# Step 2: Split the documents into chunks
text_splitter = RecursiveCharacterTextSplitter(chunk_size=512, chunk_overlap=0)
chunks = text_splitter.split_documents(documents)

# Step 3: Create the embedding model (expects OPENAI_API_KEY in the environment)
embedding_model = OpenAIEmbeddings()

# Step 4: Embed the chunks and store them in a FAISS vector store
vector_store = FAISS.from_documents(chunks, embedding_model)

# Step 5: Create a retriever
retriever = vector_store.as_retriever()

# Step 6: Define the prompt template
template = """Answer the question based only on the following context:
{context}

Question: {question}
"""
prompt = ChatPromptTemplate.from_template(template)

# Step 7: Create the language model
model = ChatOpenAI()

# Step 8: Define the output parser
output_parser = StrOutputParser()

# Step 9: Define the RAG pipeline. The retriever receives the question string
# and fills {context}; RunnablePassthrough forwards the same string as {question}.
pipeline = (
    {"context": retriever, "question": RunnablePassthrough()}
    | prompt
    | model
    | output_parser
)

# Step 10: Invoke the RAG pipeline with a question (a plain string)
question = "What is the capital of France?"
answer = pipeline.invoke(question)

# Step 11: Print the answer
print(answer)
```

This code is also available in my GitHub repository.

The following is an example using ChromaDB.

```python
# Import paths follow the LangChain releases available when this article was
# written; newer releases move most of these into the langchain_community
# and langchain_openai packages.
from langchain.document_loaders import TextLoader
from langchain.text_splitter import CharacterTextSplitter
from langchain.embeddings import SentenceTransformerEmbeddings
from langchain.vectorstores import Chroma
from langchain.prompts import ChatPromptTemplate
from langchain.chat_models import ChatOpenAI
from langchain.schema.output_parser import StrOutputParser
from langchain.schema.runnable import RunnablePassthrough

# Step 1: Load the document and split it into chunks
loader = TextLoader("path/to/document.txt")
documents = loader.load()
text_splitter = CharacterTextSplitter(chunk_size=1000, chunk_overlap=0)
chunks = text_splitter.split_documents(documents)

# Step 2: Create the embedding model (runs locally via sentence-transformers)
embedding_model = SentenceTransformerEmbeddings(model_name="all-MiniLM-L6-v2")

# Step 3: Embed the chunks and store them in ChromaDB
db = Chroma.from_documents(chunks, embedding_model)

# Step 4: Create a retriever
retriever = db.as_retriever()

# Step 5: Define the prompt template
template = """Answer the question based only on the following context:
{context}

Question: {question}
"""
prompt = ChatPromptTemplate.from_template(template)

# Step 6: Create the language model (expects OPENAI_API_KEY in the environment)
model = ChatOpenAI()

# Step 7: Define the output parser
output_parser = StrOutputParser()

# Step 8: Define the RAG pipeline. The retriever receives the question string
# and fills {context}; RunnablePassthrough forwards the same string as {question}.
pipeline = (
    {"context": retriever, "question": RunnablePassthrough()}
    | prompt
    | model
    | output_parser
)

# Step 9: Invoke the RAG pipeline with a question (a plain string)
question = "What is the main theme of the document?"
answer = pipeline.invoke(question)

# Step 10: Print the answer
print(answer)
```

LangChain provides a great framework for implementing RAG applications. It offers a wide range of document loaders to fetch documents from multiple sources, including private S3 buckets and public websites. It also provides algorithms to transform documents, such as splitting large documents into smaller chunks that are better suited for retrieval.
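The chunking step mentioned above can be illustrated with a small standard-library sketch. The `split_text` function is a hypothetical helper written for this example. It cuts text into fixed-width character windows with an optional overlap, which is simpler than what LangChain's own text splitters do (they also try to break on separators such as paragraphs or sentences).

```python
from typing import List


def split_text(text: str, chunk_size: int = 1000, chunk_overlap: int = 0) -> List[str]:
    """Split text into fixed-size character chunks.

    Consecutive chunks share `chunk_overlap` characters so that a sentence
    cut at a boundary still appears whole in at least one chunk.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be >= 0 and smaller than chunk_size")

    step = chunk_size - chunk_overlap
    chunks: List[str] = []
    start = 0
    while start < len(text):
        chunks.append(text[start:start + chunk_size])
        # Stop once the current chunk reaches the end of the text, so no
        # trailing chunk is emitted that only repeats the overlap.
        if start + chunk_size >= len(text):
            break
        start += step
    return chunks


if __name__ == "__main__":
    for chunk in split_text("abcdefghij", chunk_size=4, chunk_overlap=1):
        print(repr(chunk))
```

Smaller chunks tend to make retrieval more precise, while some overlap reduces the chance that the relevant passage is split across two chunks. The right values depend on the documents and the embedding model.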

LangChain integrates with several embedding providers, allowing you to create embeddings that capture the semantic meaning of text. It supports various vector stores for storing and searching those embeddings efficiently. It also supports different retrieval algorithms, including semantic search, parent document retriever, self-query retriever, and ensemble retriever, which can improve retrieval quality.
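As an illustration of how an ensemble of retrievers can combine its results, the sketch below implements reciprocal rank fusion, a common technique for merging several ranked lists into one. It is a standalone example, not LangChain's implementation. The document IDs are made up, and the constant `k = 60` is a conventional default that is assumed here.

```python
from collections import defaultdict
from typing import Dict, List, Sequence


def reciprocal_rank_fusion(rankings: Sequence[Sequence[str]], k: int = 60) -> List[str]:
    """Merge ranked lists of document IDs into a single ranking.

    Each document earns 1 / (k + rank) from every list it appears in
    (rank starts at 1), and documents are sorted by their total score.
    """
    scores: Dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    return sorted(scores, key=lambda doc_id: scores[doc_id], reverse=True)


if __name__ == "__main__":
    # Hypothetical results from two retrievers for the same question.
    semantic_results = ["doc_a", "doc_b", "doc_c"]
    keyword_results = ["doc_b", "doc_d", "doc_a"]
    print(reciprocal_rank_fusion([semantic_results, keyword_results]))
```

Documents that rank well in more than one list rise to the top, which is why combining a semantic retriever with a keyword-based one often gives more robust results than either alone.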
