Accelerate Distributed Deep Learning - Implementing GPU Accelerated Apache Spark 3.0 in Cisco Data I...

Executive Summary

Apache Spark has become a de facto standard and a leading data analytics platform for implementing data science and machine learning frameworks.

Spark 3.0, with native GPU support, is something that many data scientists and data engineers have long been waiting for. To meet their needs, Spark 3.0 brings GPU isolation and acceleration to Spark workloads.

In hyper-scale environments, datasets are growing at tremendous velocity and petabytes of data are becoming the norm. Today we are seeing a huge influx of data from new use cases such as IoT, autonomous driving, smart cities, genomics, and financial services, to name a few. Running multi-function analytics on these datasets and transforming them into actionable insight has become a complex task. Furthermore, training on large datasets is time-consuming, and improving learning accuracy at the same time makes it even more daunting.

With GPU acceleration in Spark 3.0, data scientists can train models on larger datasets and can afford to retrain them more frequently.

This opens up a plethora of opportunities for businesses to process the several terabytes of new data generated every day, discover new insights, and gain a competitive edge.

Apache Spark 3.0 with Cisco Data Intelligence Platform

This reference design implements Spark 3.0 on Cisco Data Intelligence Platform (CDIP), a modernized infrastructure for data lakes and AI.

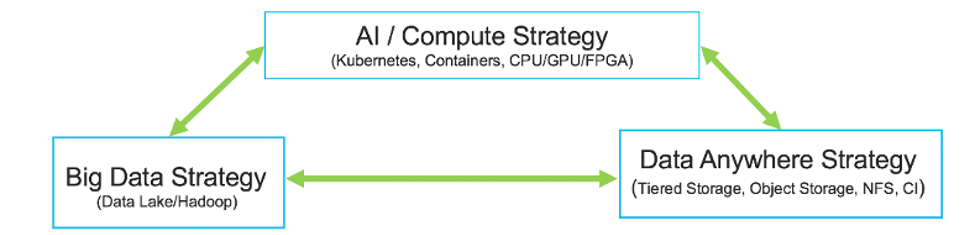

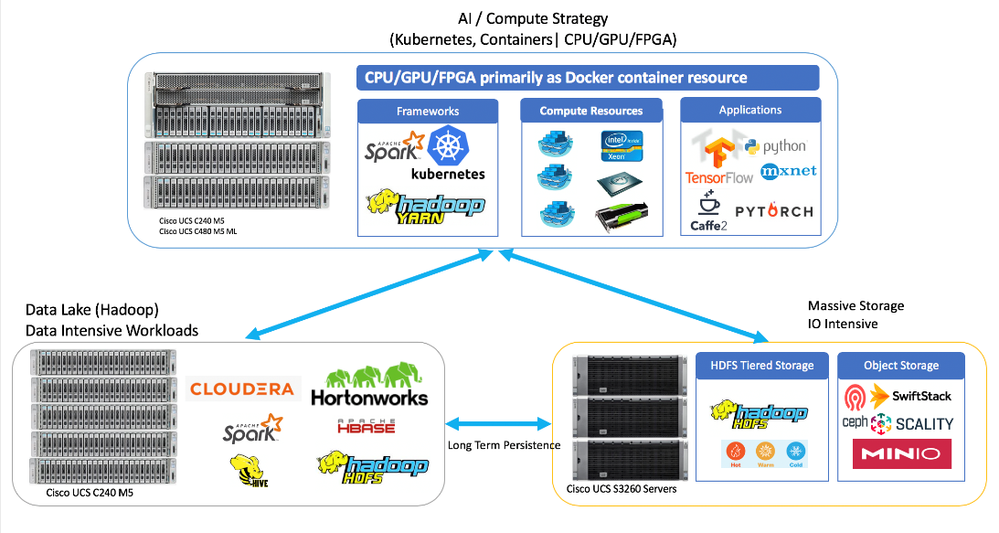

Cisco Data Intelligence Platform (CDIP) is a cloud-scale architecture that brings together big data, AI/compute farm, and storage tiers to work as a single entity, while each tier can also scale independently to address the IT issues of the modern data center. This architecture allows for:

- Extremely fast data ingest, with data engineering done at the data lake

- AI compute farm allowing for different types of AI frameworks and compute types (GPU, CPU, FPGA) to work on this data for further analytics

- A storage tier that lets you gradually retire data that has already been worked on to a storage-dense system with a lower $/TB, providing a better TCO

- Seamless scaling of the architecture to thousands of nodes with single-pane-of-glass management using Cisco Application Centric Infrastructure (ACI)

Cisco Data Intelligence Platform caters to this evolving architecture. It brings a fully scalable infrastructure with centralized management and a fully supported software stack (in partnership with industry leaders in the space) to each of the three independently scalable components of the architecture: data lake, AI/ML, and object stores.

This design can be implemented by following the CVDs and solution brief mentioned below:

Cisco Validated Design

https://www.cisco.com/c/en/us/td/docs/unified_computing/ucs/UCS_CVDs/cisco_ucs_cdip_cloudera.html

Solution Brief

Blog

Read our blog at Accelerate Distributed Deep Learning with Apache Spark

Spark 3.0 with NVIDIA offers GPU isolation and scheduling for Spark executors. With Hadoop 3.1, YARN can also schedule and use GPU resources. Not only that, YARN UI also shows GPU information both at the cluster level, as an aggregate resource, as well as at an individual node level. To easily manage the deep learning environment, YARN launches the Spark 3.0 applications with GPU.

Cisco Data Intelligence Platform is fully equipped to stage Spark 3.0, whether in the closest proximity to the data lake in a Hadoop cluster or inside a compute farm managed by YARN or Kubernetes.

Reference Architecture

The figure below shows the overall reference architecture of CDIP. With CDIP, you can start small and grow to thousands of nodes without compromising performance. It offers linear scalability as you expand your infrastructure. Furthermore, you can scale any CDIP component (for example, the data lake, compute farm, or storage tier) independently.

Industry Leading Validated Designs

Cisco has developed numerous industry leading Cisco Validated Designs (reference architectures) in the area of Big Data (CVDs with Cloudera, Hortonworks and MapR), compute farm with Kubernetes (CVD with RedHat OpenShift) and Object store (Scality, SwiftStack, Cloudian, and others).

This Cisco Data Intelligence Platform can be deployed in these variants:

- CDIP with Cloudera with Data Science Workbench (powered by Kubernetes) and Tiered Storage with Hadoop

- CDIP with Hortonworks with Apache Hadoop 3.1 and Data Science Workbench (powered by Kubernetes) and Tiered Storage with Hadoop

CDIP is an industry-leading platform with world-record TPCx-HS performance benchmark results published for both the MapReduce and Spark frameworks. These results can be viewed at http://www.tpc.org

Software and Hardware Versions

This setup used the versions shown in the table below.

| Software Components | Version |
|---|---|
| RHEL | 7.7 |
| HDP | 3.0 |
| HDFS | 3.1.1 |
| YARN | 3.1.1 |
| Java | 1.8.0_181 |
| Spark | 3.0.0-preview2 for Hadoop 3.2 |
| Scala | 2.12.10 |
| Python | 3.6.10 |
| NVIDIA Driver | 418.126.02 |
| CUDA | 10.1 |
| DKMS | 2.7.1 |
| cuDNN | 7.6.3.30 |
| TensorRT | 6.0.1.5 |
| TensorFlow Hadoop | 1.15.0 |
| TensorFlow | 2.1.0 |

Deployment Steps

Follow these deployment steps to set up Spark 3.0 in your Hadoop environment. In this reference design, two more nodes are added to an existing Hadoop cluster running HDP 3.0 with HDFS 3.1 and YARN 3.1. This document also outlines the steps needed to add those two GPU nodes to the existing cluster.

Download Spark 3.0

Download Spark 3.0 and set it up on the client machine.

Use the following link to download the preview release as shown in the Figure

Download spark-3.0.0-preview2-bin-hadoop3.2.tgz, which is pre-built for Apache Hadoop 3.2 and later, using the command below:

# wget http://apache-mirror.8birdsvideo.com/spark/spark-3.0.0-preview2/spark-3.0.0-preview2-bin-hadoop3.2.tgz

Note: Although the package targets Hadoop 3.2 and later, it worked as is, without any changes, in this Hadoop 3.1 environment.

Unpack Spark 3.0

# tar -zxvf spark-3.0.0-preview2-bin-hadoop3.2.tgz

If a previous version of Spark is already installed, copy its configuration files into the conf directory of the new Spark installation, then set the environment variables.

# cd /root/spark-3.0.0-preview2-bin-hadoop3.2/

# cp /usr/hdp/3.0.1.0-187/spark2/conf/* conf/

export HADOOP_HOME=/usr/hdp/3.0.1.0-187/hadoop

export HADOOP_CONF_DIR=/usr/hdp/3.0.1.0-187/hadoop/conf

export YARN_CONF_DIR=/etc/hadoop/conf

export SPARK_HOME=/root/spark-3.0.0-preview2-bin-hadoop3.2

Run Spark Shell

[root@rhel1 bin]# spark-shell

20/03/04 05:17:27 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Setting default log level to "WARN".

To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).

Spark context Web UI available at http://rhel1:4040

Spark context available as 'sc' (master = local[*], app id = local-1583327852814).

Spark session available as 'spark'.

Welcome to

____ __

/ __/__ ___ _____/ /__

_\ \/ _ \/ _ `/ __/ '_/

/___/ .__/\_,_/_/ /_/\_\ version 3.0.0-preview2

/_/

Using Scala version 2.12.10 (Java HotSpot(TM) 64-Bit Server VM, Java 1.8.0_181)

Type in expressions to have them evaluated.

Type :help for more information.

scala> [root@rhel1 bin]#

Run spark-submit to further verify that the environment is all set for Spark 3.0:

./bin/spark-submit --class org.apache.spark.examples.SparkPi --master yarn --deploy-mode cluster --driver-memory 4g --executor-memory 2g --executor-cores 1 examples/jars/spark-examples*.jar 10

If you need to clean up before submitting more jobs, use the command below to kill all applications in the RUNNING state. This step is optional; it makes it easier to identify jobs in the YARN GUI.

Kill all running applications

# for x in $(yarn application -list -appStates RUNNING | awk 'NR > 2 { print $1 }'); do yarn application -kill $x; done
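The `NR > 2` filter above assumes that `yarn application -list` prints exactly two header lines. A slightly more defensive sketch selects only rows whose first field looks like an application ID. The function name `extract_app_ids` is hypothetical, and the ID pattern is an assumption based on the usual `application_<timestamp>_<sequence>` form:

```shell
#!/bin/bash
# Hypothetical helper: print the first column of every row that starts with
# something shaped like a YARN application ID; header lines are skipped
# because they do not match the pattern.
extract_app_ids() {
  awk '$1 ~ /^application_[0-9]+_[0-9]+$/ { print $1 }'
}

# Usage on a live cluster (not executed here):
#   yarn application -list -appStates RUNNING 2>/dev/null | extract_app_ids |
#     while read -r app_id; do yarn application -kill "$app_id"; done
```

Filtering by pattern rather than by line number keeps the loop from passing header text to `yarn application -kill` if the header layout differs between versions.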

Get TensorFlowOnSpark

Get TensorFlowOnSpark by running the following commands:

# git clone https://github.com/yahoo/TensorFlowOnSpark.git

# cd TensorFlowOnSpark/

# zip -r tfspark.zip tensorflowonspark

# ls

# hdfs dfs -ls /user

# hdfs dfs -ls /user/root

Upload the zip to HDFS

# hdfs dfs -put tfspark.zip /user/root/.

# hdfs dfs -ls /user/root

Get tensorflow-hadoop

Either download the `tensorflow-hadoop` jar from the public [maven repository](https://mvnrepository.com/artifact/org.tensorflow/tensorflow-hadoop/) or [build it from source](https://github.com/tensorflow/ecosystem/tree/master/hadoop). Put the jar onto HDFS for easier access:

# wget https://repo1.maven.org/maven2/org/tensorflow/tensorflow-hadoop/1.15.0/tensorflow-hadoop-1.15.0.jar

Upload the .jar file to HDFS

# hdfs dfs -put tensorflow-hadoop-1.15.0.jar /user/root/.

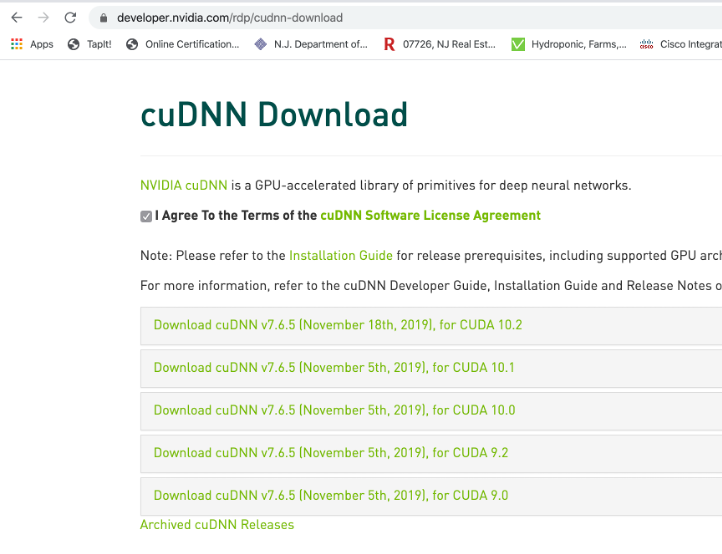

Download cuDNN

cuDNN can be downloaded from the following link. The download requires user credentials; if you don't have an account, you must create one.

https://developer.nvidia.com/rdp/cudnn-download

Download the Red Hat version to your client machine. In this setup, we downloaded cuDNN for CUDA 10.1.

# tar -xvzf cudnn-10.1-linux-x64-v7.6.5.32.tgz

# cd cuda/

# zip -r cudnn.zip *

Upload the cudnn.zip file to HDFS

# hdfs dfs -put cudnn.zip /user/root/.

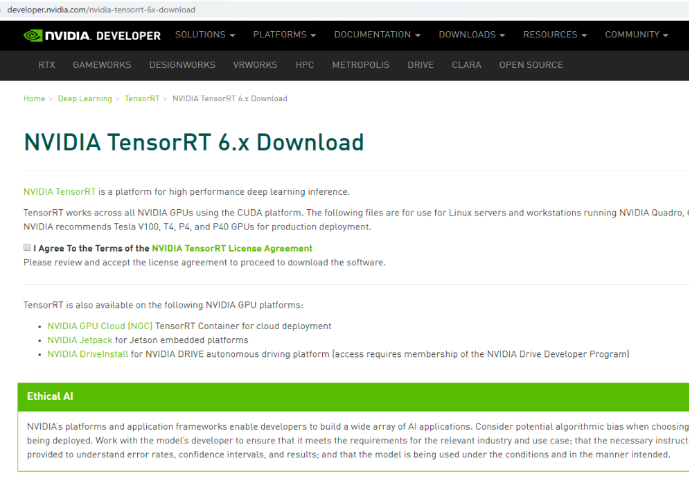

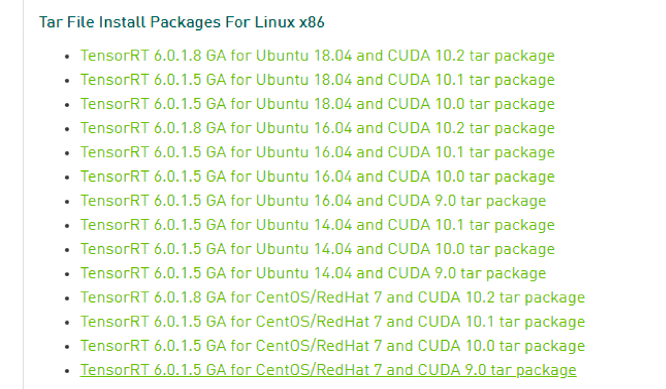

Download TensorRT

Download TensorRT from the NVIDIA developer site.

https://developer.nvidia.com/nvidia-tensorrt-6x-download

Download TensorRT 6.0.1.5 for CentOS/RedHat 7 and CUDA 10.1 tar package

# tar -xvzf TensorRT-6.0.1.5.CentOS-7.6.x86_64-gnu.cuda-10.1.cudnn7.6.tar.gz

# cd TensorRT-6.0.1.5/

# zip -r TensorRT.zip *

Upload it to HDFS as below

# hdfs dfs -put TensorRT.zip /user/root/.

# hdfs dfs -ls /user/root/

Note: Currently `tensorflow-gpu` [requires](https://www.tensorflow.org/install/gpu#software_requirements) `cuDNN >= 7.6` and `TensorRT == 6.0`.

Install Python

Install Python 3 on your client machine.

# yum install -y openssl openssl-devel

# export PYTHON_ROOT=~/Python

# export VERSION=3.6.10

# wget https://www.python.org/ftp/python/${VERSION}/Python-${VERSION}.tgz

# tar -xvf Python-${VERSION}.tgz

# cd Python-${VERSION}

# ./configure --prefix="${PYTHON_ROOT}"

# make

# make install

# cd ..

# cd ${PYTHON_ROOT}

# bin/pip3 install -U pip setuptools

# bin/pip3 install tensorflow==2.1.0 tensorflow-datasets==2.0.0 tensorflow-gpu==2.1.0 packaging

Upload the zip file to HDFS

# zip -r Python.zip *

# hdfs dfs -put Python.zip /user/root/.

MNIST Data Setup

Download the original data

# mkdir /root/mnist

# cd /root/mnist

# curl -O "http://yann.lecun.com/exdb/mnist/train-images-idx3-ubyte.gz"

# curl -O "http://yann.lecun.com/exdb/mnist/train-labels-idx1-ubyte.gz"

# curl -O "http://yann.lecun.com/exdb/mnist/t10k-images-idx3-ubyte.gz"

# curl -O "http://yann.lecun.com/exdb/mnist/t10k-labels-idx1-ubyte.gz"

# zip -r mnist.zip *

Upload the mnist zip file to HDFS

# hdfs dfs -put mnist.zip /user/root/.

# cd ..

Prep the New Server

These instructions prepare two new servers and add them to the already running Hadoop cluster via the Ambari dashboard.

Update the server using the following commands. Running yum update requires the server to be registered with a Red Hat subscription, and the server must be rebooted after the update.

# yum -y update

# systemctl stop firewalld

# systemctl disable firewalld

Disable SELinux by setting SELINUX=disabled in /etc/selinux/config

Install Java

# rpm -ivh jdk-8u181-linux-x64.rpm

Set Java alternatives

# for item in java javac javaws jar jps javah javap jcontrol jconsole jdb; do

rm -f /var/lib/alternatives/$item

alternatives --install /usr/bin/$item $item /usr/java/jdk1.8.0_181-amd64/bin/$item 9

alternatives --set $item /usr/java/jdk1.8.0_181-amd64/bin/$item

done

Set JAVA_HOME

# export JAVA_HOME=/usr/java/jdk1.8.0_181-amd64

[root@spark02 ~]# java -version

java version "1.8.0_181"

Java(TM) SE Runtime Environment (build 1.8.0_181-b13)

Java HotSpot(TM) 64-Bit Server VM (build 25.181-b13, mixed mode)

[root@spark02 ~]#

Reboot the server

# reboot

Verify the Red Hat release version

[root@spark02 ~]# cat /etc/redhat-release

Red Hat Enterprise Linux Server release 7.7 (Maipo)

[root@spark02 ~]#

Install NVIDIA Driver

Verify gcc version

[root@spark02 ~]# gcc --version

gcc (GCC) 4.8.5 20150623 (Red Hat 4.8.5-39)

Copyright (C) 2015 Free Software Foundation, Inc.

This is free software; see the source for copying conditions. There is NO warranty; not even for MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE.

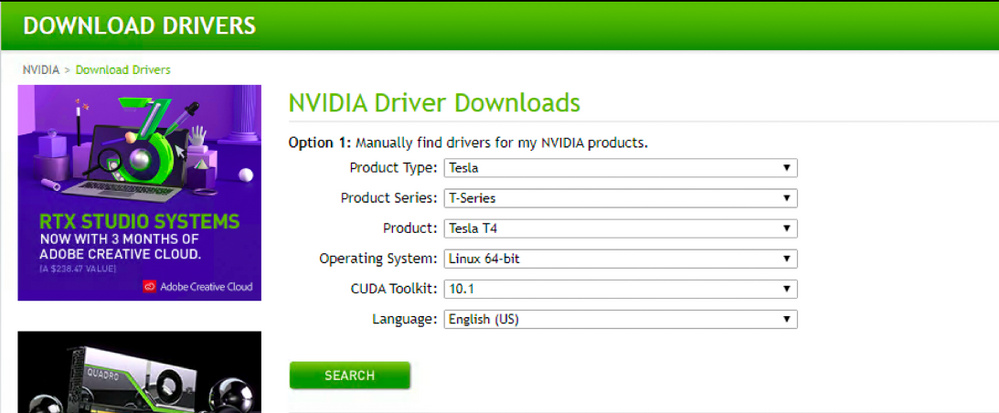

Install the NVIDIA driver as shown below. Download the driver package from http://www.nvidia.com/Download/ based on your GPU card, and select CUDA Toolkit 10.1.

[root@spark02 ~]# rpm -ivh nvidia-driver-local-repo-rhel7-418.126.02-1.0-1.x86_64.rpm

warning: nvidia-driver-local-repo-rhel7-418.126.02-1.0-1.x86_64.rpm: Header V3 RSA/SHA512 Signature, key ID 7fa2af80: NOKEY

Preparing... ################################# [100%]

Updating / installing...

1:nvidia-driver-local-repo-rhel7-41################################# [100%]

[root@spark02 ~]#

Install dkms

http://rpmfind.net/linux/rpm2html/search.php?query=dkms

# yum install dkms-2.7.1-1.el7.noarch.rpm

Install CUDA 10.1

# wget http://developer.download.nvidia.com/compute/cuda/10.1/Prod/local_installers/cuda-repo-rhel7-10-1-local-10.1.243-418.87.00-1.0-1.x86_64.rpm

# rpm -i cuda-repo-rhel7-10-1-local-10.1.243-418.87.00-1.0-1.x86_64.rpm

# yum clean all

# yum -y install nvidia-driver-latest-dkms

# yum -y install cuda

Download cuDNN for 10.1 CUDA

# wget https://developer.nvidia.com/compute/machine-learning/cudnn/secure/7.6.3.30/Production/10.1_20190822/cudnn-10.1-linux-x64-v7.6.3.30.tgz

# tar -xzvf cudnn-10.1-linux-x64-v7.6.3.30.tgz

# cp /root/cuda/include/cudnn.h /usr/local/cuda-10.1/include

# cp /root/cuda/lib64/libcudnn* /usr/local/cuda-10.1/lib64

# chmod a+r /usr/local/cuda-10.1/include/cudnn.h /usr/local/cuda-10.1/lib64/libcudnn*

Note: Use the cuDNN build that matches your CUDA version. The download URL may also change over time.

- Add CUDA to the PATH and LD_LIBRARY_PATH variables on all the GPU nodes.

- PATH needs to include /usr/local/<cuda>/bin, and LD_LIBRARY_PATH needs to include /usr/local/<cuda>/lib64:

[root@spark02 ~]# export PATH=/usr/local/cuda-10.1/bin${PATH:+:${PATH}}

[root@spark02 ~]# export LD_LIBRARY_PATH=/usr/local/cuda-10.1/lib64${LD_LIBRARY_PATH:+:${LD_LIBRARY_PATH}}
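The `${VAR:+:${VAR}}` form in the exports above appends a colon and the previous value only when the variable is already set and non-empty. This avoids a trailing `:`, which would add an empty entry that is typically treated as the current directory. A minimal sketch of the same expansion, using a hypothetical helper named `prepend_dir`:

```shell
#!/bin/bash
# Hypothetical helper: prepend DIR to an existing colon-separated list.
# ${current:+:$current} expands to ":<old value>" only when the old value
# is non-empty, and to nothing otherwise.
prepend_dir() {
  local dir="$1" current="$2"
  printf '%s%s\n' "$dir" "${current:+:$current}"
}

prepend_dir /usr/local/cuda-10.1/lib64 ""        # empty old value: no trailing colon
prepend_dir /usr/local/cuda-10.1/bin "/usr/bin"  # old value kept after the new entry
```

To make these settings survive the reboot in the next step, they would normally be placed in a shell profile; where exactly is left to local convention.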

Reboot the server for the driver to take effect.

# reboot

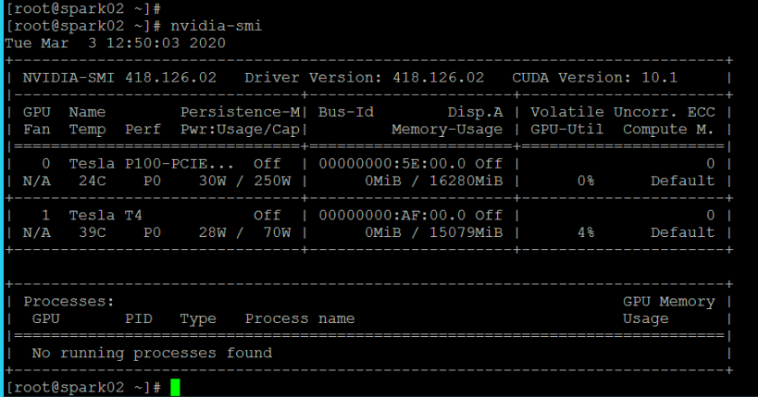

After the reboot run the following

# nvidia-smi

Create the following listGPU.sh file on every GPU server that will run the Spark driver and/or Spark executors, then run it to list the GPUs.

[root@spark02 ~]# cat listGPU.sh

#!/bin/bash

ADDRS=`nvidia-smi --query-gpu=index --format=csv,noheader | sed -e ':a' -e 'N' -e'$!ba' -e 's/\n/","/g'`

echo {\"name\": \"gpu\", \"addresses\":[\"$ADDRS\"]}

[root@spark02 ~]#

[root@spark02 ~]# ./listGPU.sh

{"name": "gpu", "addresses":["0","1"]}

Run the following script.

For more details, please follow the CVD at

Note: If you don't run the following, NodeManager service may not start…

[root@spark02 ~]# ./hadoop-yarn.sh

[root@spark02 ~]# cat hadoop-yarn.sh

mkdir -p /sys/fs/cgroup/cpu/hadoop-yarn

chown -R yarn /sys/fs/cgroup/cpu/hadoop-yarn

mkdir -p /sys/fs/cgroup/memory/hadoop-yarn

chown -R yarn /sys/fs/cgroup/memory/hadoop-yarn

mkdir -p /sys/fs/cgroup/blkio/hadoop-yarn

chown -R yarn /sys/fs/cgroup/blkio/hadoop-yarn

mkdir -p /sys/fs/cgroup/net_cls/hadoop-yarn

chown -R yarn /sys/fs/cgroup/net_cls/hadoop-yarn

mkdir -p /sys/fs/cgroup/devices/hadoop-yarn

chown -R yarn /sys/fs/cgroup/devices/hadoop-yarn

[root@spark02 ~]#
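The hadoop-yarn.sh script repeats one mkdir/chown pair for each of five cgroup controllers, so it can also be written as a loop. The sketch below is one possible form, not the script used in this setup; `CGROUP_ROOT`, `DRY_RUN`, and `prepare_yarn_cgroups` are hypothetical names, and the dry-run default is an assumption added so the commands can be reviewed before being applied:

```shell
#!/bin/bash
# Hypothetical loop form of hadoop-yarn.sh. With DRY_RUN=1 (the default here)
# it only prints the commands; set DRY_RUN=0 and run as root to apply them.
CGROUP_ROOT="${CGROUP_ROOT:-/sys/fs/cgroup}"
DRY_RUN="${DRY_RUN:-1}"

prepare_yarn_cgroups() {
  local controller dir
  for controller in cpu memory blkio net_cls devices; do
    dir="${CGROUP_ROOT}/${controller}/hadoop-yarn"
    if [ "$DRY_RUN" = "1" ]; then
      echo "mkdir -p ${dir}"
      echo "chown -R yarn ${dir}"
    else
      mkdir -p "$dir"
      chown -R yarn "$dir"
    fi
  done
}
```

Calling `prepare_yarn_cgroups` with the defaults prints the same ten commands that the original script contains.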

Note: Driver 418.126.02 and CUDA 10.1 do not work with RHEL 7.5; the nvidia-smi command constantly gives errors such as “NVIDIA-SMI has failed because it couldn't communicate with the NVIDIA driver. Make sure that the latest NVIDIA driver is installed and running.”

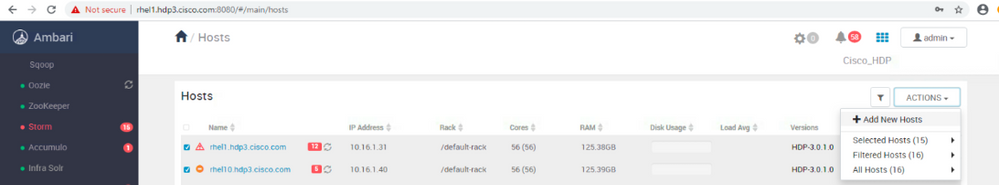

Add Node to the cluster

In the Ambari dashboard, add the newly set up GPU nodes to the cluster. In this reference design, we are not adding these nodes to the HDFS cluster. However, these nodes will run the YARN NodeManager, and all client configurations will be installed.

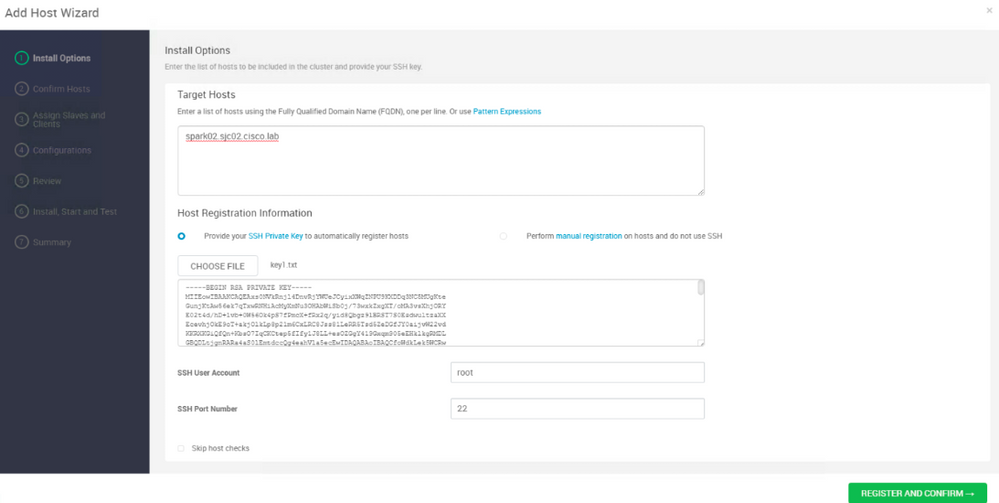

Use the Add Host Wizard to add the nodes. You can add multiple nodes at the same time. Here we show how one node is added. To add multiple nodes at the same time, specify their FQDNs one per line as shown below.

Specify SSH private key and click REGISTER AND CONFIRM

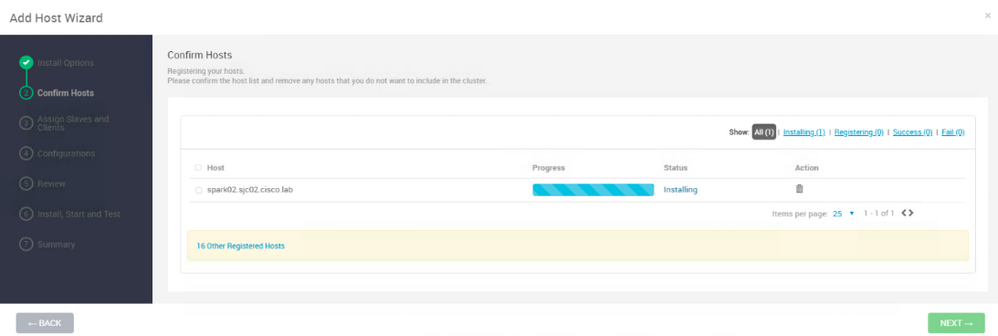

Click Next

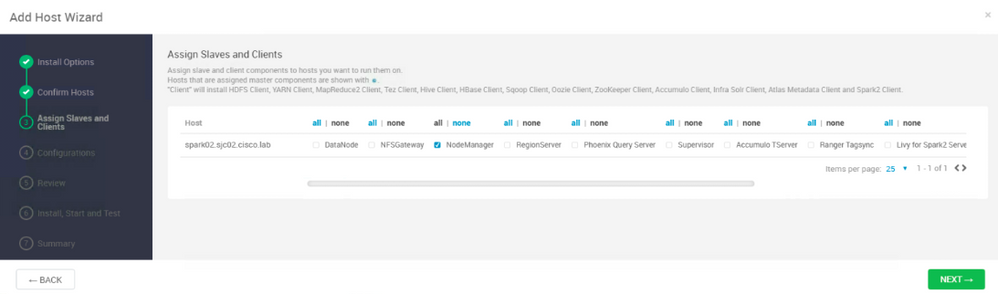

Select NodeManager and Client. Click Next

Select GPU-Nodes in YARN configuration group. In our environment, GPU-Nodes is a separate configuration group for YARN specific configuration. This is a mixed cluster where there are nodes with GPU and without GPU. These details are outlined in the CVD as shared earlier in this document.

Click Next

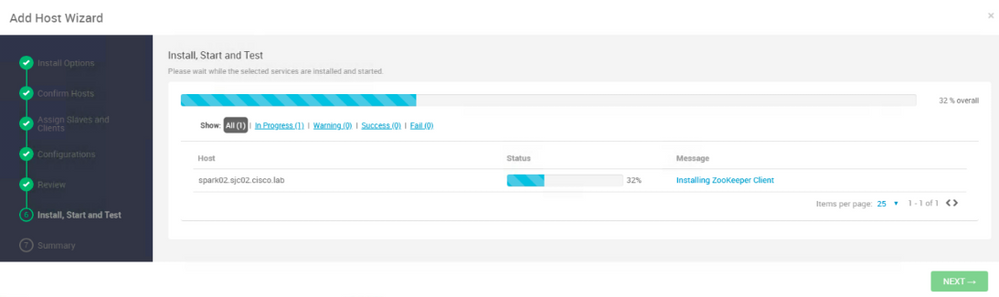

Click Deploy

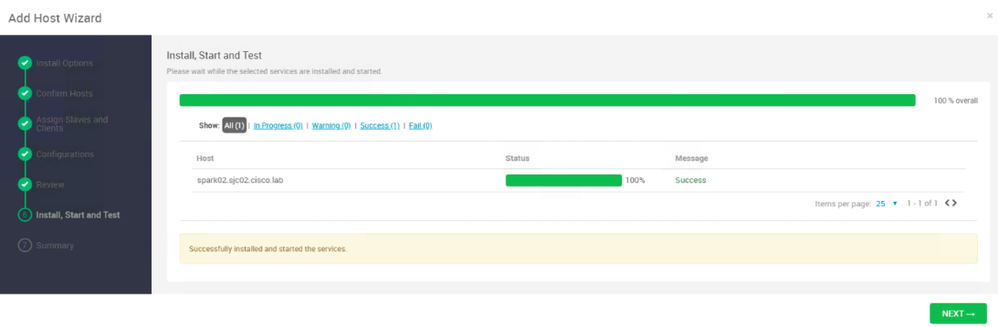

The installation will progress. Watch it for any failures; if any occur, fix them and re-run the install.

Click Next to complete the installation

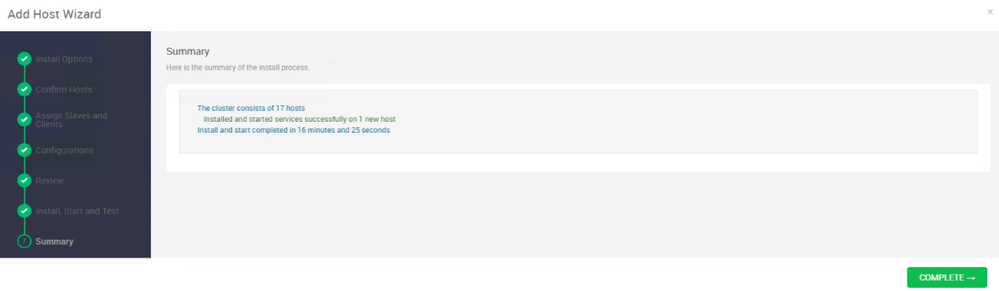

Click COMPLETE

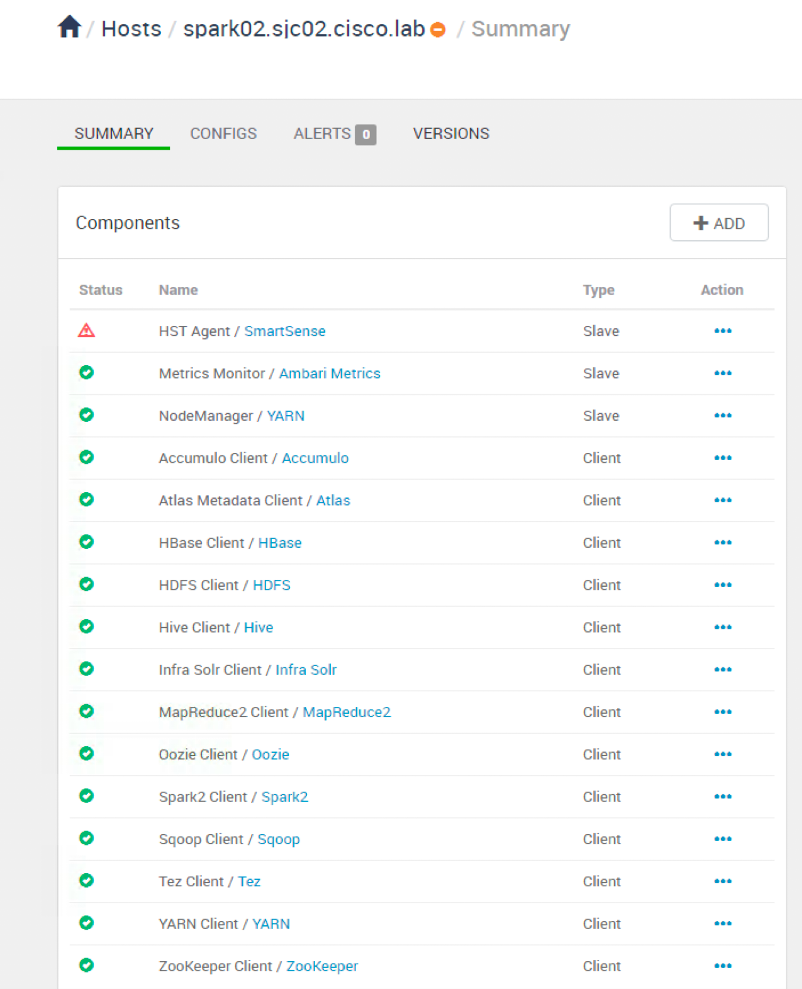

Once the installation is complete, the newly added host will show up under the Hosts section. Verify that all the required services are running as shown below.

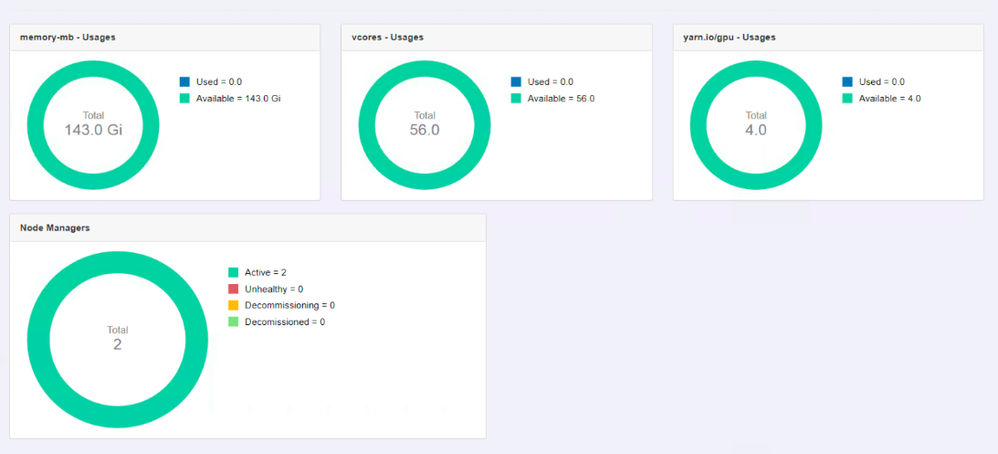

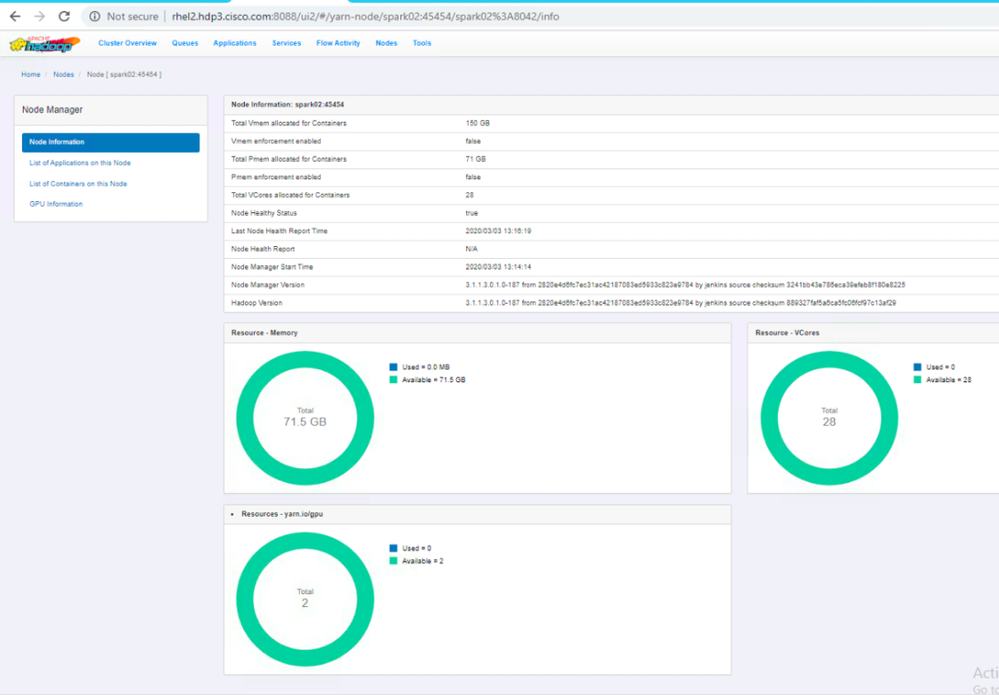

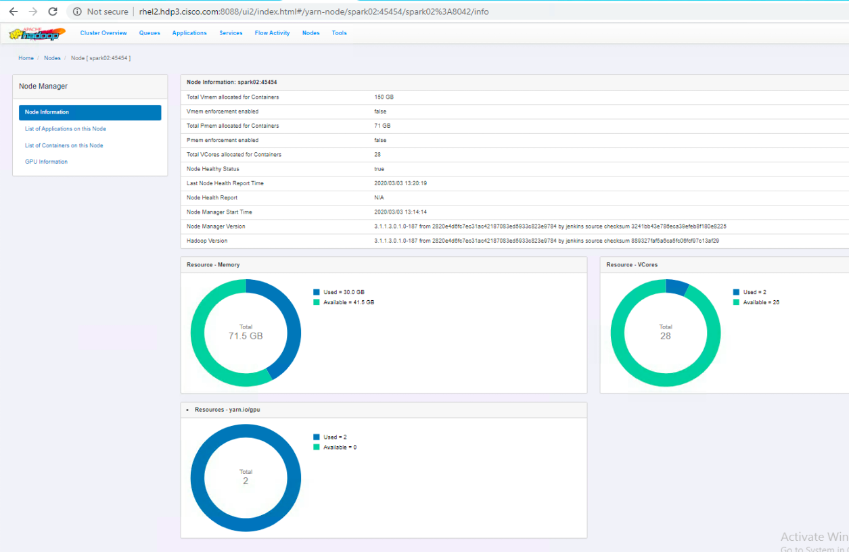

Launch the YARN GUI to verify that the node has been added to the YARN cluster and that all of its resources appear in the cluster overview.

Also click the node in the Nodes section to verify it individually. Verify the memory, CPU, and GPU resources.

Run Spark Job

export HDFS_PREFIX=hdfs:///user/root

export TFOS_PATH=/root/TensorFlowOnSpark

export TFHDP_PATH=${HDFS_PREFIX}/tensorflow-hadoop-1.15.0.jar

export CUDA_HOME=/usr/local/cuda-10.1

export LIB_CUDA=${CUDA_HOME}/lib64

spark-submit \

--master yarn \

--deploy-mode cluster \

--num-executors 4 \

--executor-memory 4G \

--jars ${TFHDP_PATH} \

--archives ${HDFS_PREFIX}/Python.zip#Python,${HDFS_PREFIX}/TensorRT.zip#trt,${HDFS_PREFIX}/mnist.zip#mnist \

--conf spark.executorEnv.LD_LIBRARY_PATH=$LIB_CUDA:trt/lib \

--driver-library-path=$LIB_CUDA \

--conf spark.executorEnv.CLASSPATH=$(hadoop classpath --glob) \

--conf spark.pyspark.python=./Python/bin/python3 \

/root/TensorFlowOnSpark/examples/mnist/mnist_data_setup.py \

--output ${HDFS_PREFIX}/mnist4

This job converts the MNIST datasets into CSV format and writes the output to HDFS, as shown below.

[root@spark01 ~]# hdfs dfs -ls /user/root/mnist4/csv/train

Found 11 items

-rw-r--r-- 3 root hdfs 0 2020-03-03 08:18 /user/root/mnist4/csv/train/_SUCCESS

-rw-r--r-- 3 root hdfs 9350237 2020-03-03 08:18 /user/root/mnist4/csv/train/part-00000

-rw-r--r-- 3 root hdfs 11232549 2020-03-03 08:18 /user/root/mnist4/csv/train/part-00001

-rw-r--r-- 3 root hdfs 11204430 2020-03-03 08:18 /user/root/mnist4/csv/train/part-00002

-rw-r--r-- 3 root hdfs 11229768 2020-03-03 08:18 /user/root/mnist4/csv/train/part-00003

-rw-r--r-- 3 root hdfs 11215700 2020-03-03 08:18 /user/root/mnist4/csv/train/part-00004

-rw-r--r-- 3 root hdfs 11216704 2020-03-03 08:18 /user/root/mnist4/csv/train/part-00005

-rw-r--r-- 3 root hdfs 11230816 2020-03-03 08:18 /user/root/mnist4/csv/train/part-00006

-rw-r--r-- 3 root hdfs 11219885 2020-03-03 08:18 /user/root/mnist4/csv/train/part-00007

-rw-r--r-- 3 root hdfs 11213064 2020-03-03 08:18 /user/root/mnist4/csv/train/part-00008

-rw-r--r-- 3 root hdfs 10462841 2020-03-03 08:18 /user/root/mnist4/csv/train/part-00009

[root@spark01 ~]#
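The `--archives` option in the job above takes a single comma-separated value, so a stray space after a comma makes the shell split it into separate arguments. A minimal sketch, assuming bash, that builds the value from individual entries; `join_by_comma` and the `ARCHIVES` variable are hypothetical names, not part of Spark:

```shell
#!/bin/bash
# Hypothetical helper: join all arguments with commas and no spaces.
join_by_comma() {
  local IFS=,
  echo "$*"
}

HDFS_PREFIX=hdfs:///user/root
ARCHIVES=$(join_by_comma \
  "${HDFS_PREFIX}/Python.zip#Python" \
  "${HDFS_PREFIX}/TensorRT.zip#trt" \
  "${HDFS_PREFIX}/mnist.zip#mnist")
echo "${ARCHIVES}"
```

The result could then be passed to spark-submit as `--archives "${ARCHIVES}"`, which keeps each archive on its own line in the script without risking an embedded space.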

GPU discovery Script

Make sure the GPU discovery script is deployed on all the GPU nodes in the cluster.

The GPU resource discovery script is used during the launch of an executor to find available GPU resources and allocate the executor GPUs based off the JSON object of GPUs returned.

For more information, please refer to the [Spark Documentation](https://spark.apache.org/docs/3.0.0-preview/running-on-yarn.html#resource-allocation-and-configuration-overview).

The GPU discovery script is shown below:

[root@spark01 ~]# cat listGPU.sh

#!/bin/bash

ADDRS=`nvidia-smi --query-gpu=index --format=csv,noheader | sed -e ':a' -e 'N' -e'$!ba' -e 's/\n/","/g'`

echo {\"name\": \"gpu\", \"addresses\":[\"$ADDRS\"]}

[root@spark01 ~]#
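The discovery script has to print a JSON object with a resource name and an array of addresses, as in the `{"name": "gpu", "addresses":["0","1"]}` output shown earlier. The sketch below separates the formatting step from the `nvidia-smi` call so that it can be checked on a machine without a GPU. It is an alternative illustration, not the script used in this setup, and `format_gpu_json` is a hypothetical name:

```shell
#!/bin/bash
# Hypothetical helper: read one GPU index per line on stdin and print the
# JSON object in the same shape as the listGPU.sh output.
format_gpu_json() {
  local addrs
  # Trim whitespace, wrap each index in double quotes, then join with commas.
  addrs=$(sed -e 's/^[[:space:]]*//' -e 's/[[:space:]]*$//' -e 's/.*/"&"/' | paste -sd, -)
  printf '{"name": "gpu", "addresses":[%s]}\n' "$addrs"
}

# On a GPU node this would be fed by (not executed here):
#   nvidia-smi --query-gpu=index --format=csv,noheader | format_gpu_json
```

Checking the formatter with fixed input, for example `printf '0\n1\n' | format_gpu_json`, is a quick way to confirm the JSON shape before submitting a job that depends on it.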

Execute the training

Execute the training using the converted CSV dataset, specifying the --images_labels parameter in the command below.

export HDFS_PREFIX=hdfs:///user/${USER}

export DISCOVERY_SCRIPT_PATH=${HOME}

export DISCOVERY_SCRIPT_NAME=listGPU.sh

export TFOS_PATH=${HOME}/TensorFlowOnSpark

export GPUS_PER_TASK=1

export GPUS_PER_EXECUTOR=1

export NUM_EXECUTORS=4

# specify cuda

export CUDA_HOME=/usr/local/cuda-10.1

export LIB_CUDA=${CUDA_HOME}/lib64

export JAVA_HOME=/usr/java/jdk1.8.0_181-amd64

# LIB_JVM looks for libjvm.so – Make sure the path below has that file

export LIB_JVM=${JAVA_HOME}/jre/lib/amd64/server

export HADOOP_HOME=/usr/hdp/3.0.1.0-187/hadoop

# export LIB_HDFS=${HADOOP_HOME}/lib/native

# this looks for libhdfs.so make sure LIB_HDFS has that file

export LIB_HDFS=/usr/hdp/3.0.1.0-187/usr/lib/

spark-submit \

--conf spark.executor.resource.gpu.amount=${GPUS_PER_EXECUTOR} \

--conf spark.task.resource.gpu.amount=${GPUS_PER_TASK} \

--conf spark.executorEnv.EXECUTOR_GPUS=${GPUS_PER_EXECUTOR} \

--conf spark.executor.resource.gpu.discoveryScript=./${DISCOVERY_SCRIPT_NAME} \

--files ${DISCOVERY_SCRIPT_PATH}/${DISCOVERY_SCRIPT_NAME} \

--master yarn \

--deploy-mode cluster \

--num-executors ${NUM_EXECUTORS} \

--executor-memory 27G \

--conf spark.executor.memoryOverhead=100G \

--py-files ${HDFS_PREFIX}/tfspark.zip \

--conf spark.dynamicAllocation.enabled=false \

--conf spark.yarn.maxAppAttempts=1 \

--archives ${HDFS_PREFIX}/Python.zip#Python,${HDFS_PREFIX}/cudnn.zip#cudnn,${HDFS_PREFIX}/TensorRT.zip#trt \

--conf spark.pyspark.python=./Python/bin/python3 \

--conf spark.executorEnv.LD_LIBRARY_PATH=${LIB_CUDA}:${LIB_JVM}:${LIB_HDFS}:cudnn/lib64:trt/lib \

--conf spark.executorEnv.CLASSPATH=$(hadoop classpath --glob) \

--driver-library-path=${LIB_CUDA}:${LIB_JVM}:cudnn/lib64:trt/lib:${LIB_HDFS} \

${TFOS_PATH}/examples/mnist/keras/mnist_spark.py \

--images_labels mnist4/csv/train \

--model_dir ${HDFS_PREFIX}/mnist_model
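The three GPU settings in the command above determine how many GPUs the job asks YARN for. As a rough sketch, assuming whole-number GPU amounts, the total request is the number of executors times the GPUs per executor, and the number of GPU tasks that can run concurrently in one executor is the GPUs per executor divided by the GPUs per task. The helper name `gpu_plan` is hypothetical:

```shell
#!/bin/bash
# Hypothetical helper: summarize the GPU demand implied by the settings.
# Arguments: number of executors, GPUs per executor, GPUs per task
# (whole numbers only; shell arithmetic is integer arithmetic).
gpu_plan() {
  local executors="$1" per_executor="$2" per_task="$3"
  echo "total_gpus=$(( executors * per_executor ))"
  echo "gpu_tasks_per_executor=$(( per_executor / per_task ))"
}

# Values used in this setup: NUM_EXECUTORS=4, GPUS_PER_EXECUTOR=1, GPUS_PER_TASK=1
gpu_plan 4 1 1
```

With these values the job needs four GPUs in total, which matches two nodes with two GPUs each.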

Solution Validation

From 2 nodes: 4 executors, 1 GPU/Executor, 1 GPU/Task

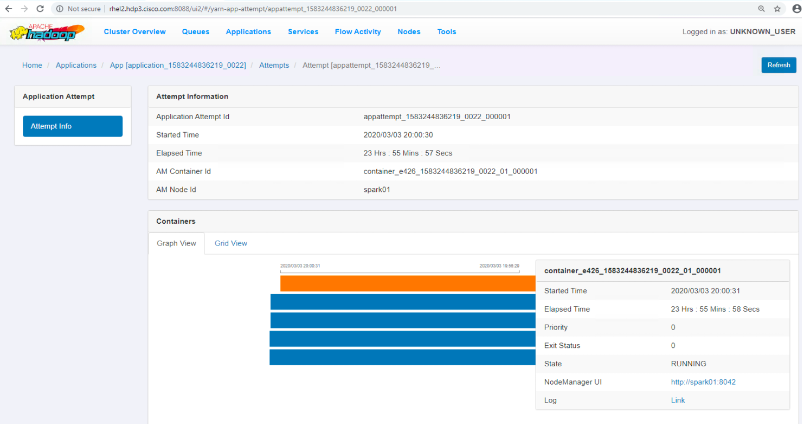

The Application tab in the YARN GUI shows the Spark driver container running in the application master, along with the four executors.

YARN GUI – Application Tab

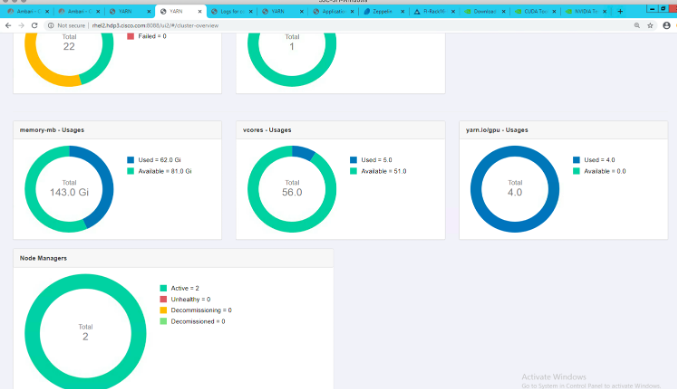

In the YARN GUI, the Cluster Overview tab shows that all four GPUs have been allocated to and utilized by the submitted job.

YARN GUI – Cluster Overview Tab

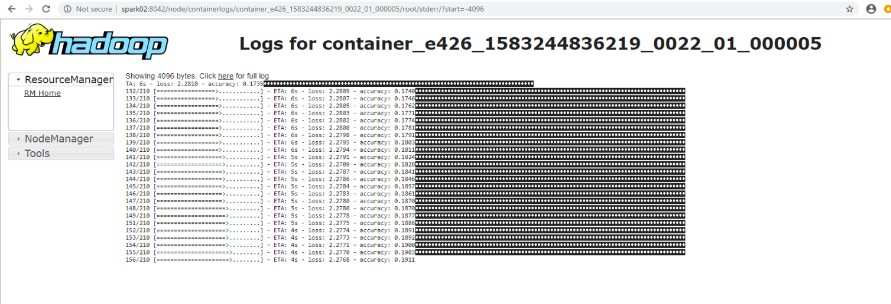

Container Log Output

Log output of the container performing training

At the node level, we can see the GPU is being used by the application. As we can see in the below figure, both the GPUs are allocated to the job and being used.

YARN GUI – Nodes – Node Information

Conclusion

In a nutshell, given the popularity of the Spark framework among data scientists, Spark is likely to continue to gain momentum, and more and more Spark applications will be transformed and accelerated using GPUs as a resource. Spark 3.0 supports GPU isolation and scheduling for executors fully managed by YARN. Furthermore, Spark 3.0 enhances Kubernetes integration by providing a new shuffle service for Spark on Kubernetes that allows dynamic scaling up and down. Cisco Data Intelligence Platform offers an ideal infrastructure framework for staging and running deep learning training jobs in Spark 3.0.

Demo

Demo Highlights

- How data scientists can take advantage of Apache Spark 3.0 to launch a massive deep learning workload running a TensorFlow application.

- How YARN pools and schedules GPUs that can be utilized across nodes for a Spark 3.0 distributed job.

- How data scientists can monitor the progress of their jobs.

- How IT can monitor the resources consumed.
