Persistent Storage with Cisco Container Platform

One of the great benefits of deploying Cisco Container Platform on Cisco HyperFlex is the ability to get persistent storage for containers out of the box with minimal configuration. This works well for containers whose data must survive a terminated pod, a lost VM, or, heaven forbid, a host going down!

With the recent release of CCP 2.2 and HX 3.5 this is super simple and works great! Let's illustrate how all of this works by starting at the bottom and working our way up to an application.

Volumes

Before storage can be used it must be presented to Kubernetes. This is done through volumes. Most Kubernetes pods get an ephemeral volume attached to the pod, or just a directory with perhaps some data. When the pod is destroyed or recreated, the ephemeral volume is destroyed with it and the data is lost.

Volumes specify what storage to mount into a pod. Depending on the driver, a volume can have its own lifetime independent of the pod and can be created or destroyed at any time. Typically, an administrator creates a volume, and the container then specifies that it requires this volume to be mounted. Let's create a simple NGINX example to see what this looks like. We will use the following YAML file and call it ng1-hostPath.yaml:
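
To make the ephemeral case concrete, here is a minimal sketch of a pod that uses an emptyDir volume, which Kubernetes deletes along with the pod. The pod name `ng-ephemeral` and the volume name `scratch` are made up for illustration and do not appear elsewhere in this post.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: ng-ephemeral          # hypothetical name, for illustration only
spec:
  containers:
  - name: nginx
    image: nginx
    volumeMounts:
    - name: scratch
      mountPath: /usr/share/nginx/html/
  volumes:
  - name: scratch             # hypothetical volume name
    emptyDir: {}              # removed from the node when the pod is deleted
```

Anything written under the mount path is gone once this pod is deleted, which is the behavior the rest of this post works around.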

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    run: ng1
  name: ng1
  namespace: default
spec:
  replicas: 1
  selector:
    matchLabels:
      run: ng1
  template:
    metadata:
      labels:
        run: ng1
    spec:
      # pin the pod to one worker so it always finds the same host directory
      nodeSelector:
        kubernetes.io/hostname: <the hostname of one of your workers.>
      containers:
      - image: nginx
        name: ng1
        volumeMounts:
        - name: ngx
          mountPath: /usr/share/nginx/html/
      volumes:
      - name: ngx
        # directory on the worker node itself; created if it does not exist
        hostPath:
          type: DirectoryOrCreate
          path: /ng1
---
apiVersion: v1
kind: Service
metadata:
  labels:
    run: ng1
  name: ng1
  namespace: default
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
  selector:
    run: ng1
  sessionAffinity: None
  type: LoadBalancer

Replace the hostname value with the hostname of any worker node in your cluster. (For CCP, my hostname was: istio2-worker29ceaca970)

This YAML file gives us a deployment and a service. After creating them with kubectl create -f ng1-hostPath.yaml, we can find the IP address where the service is exposed by running:

kubectl get svc

You will see the load balancer IP address similar to what my configuration shows:

NAME              TYPE           CLUSTER-IP     EXTERNAL-IP    PORT(S)        AGE
analytics-agent   ClusterIP      10.99.210.47   <none>         9090/TCP       1d
kubernetes        ClusterIP      10.96.0.1      <none>         443/TCP        1d
ng1               LoadBalancer   10.105.7.95    10.99.104.37   80:32017/TCP   1m

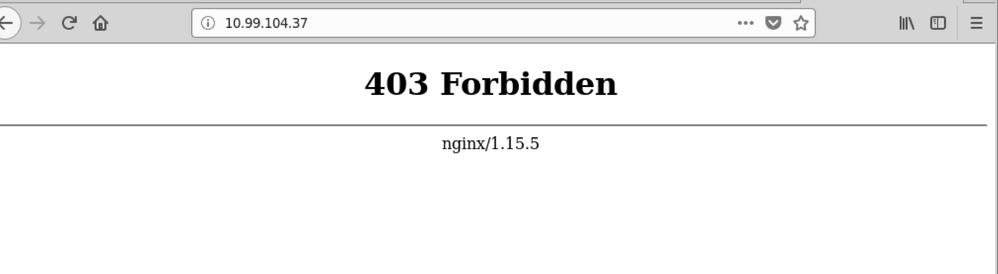

Now, we see that the external IP is 10.99.104.37. Navigating to this IP address in our browser we get:

An error page (403 Forbidden) is expected here because there is nothing in the directory and nginx by default does not list directory contents. Let's add something to it. First, find the name of the pod that's running:

kubectl get pods | grep ng1

Take that name and substitute it into the command below:

kubectl exec -it ng1-77ccc7dc74-xbbpv -- /bin/bash
# now you will be on the pod, type this command:
echo "Hello Volume" >/usr/share/nginx/html/index.html

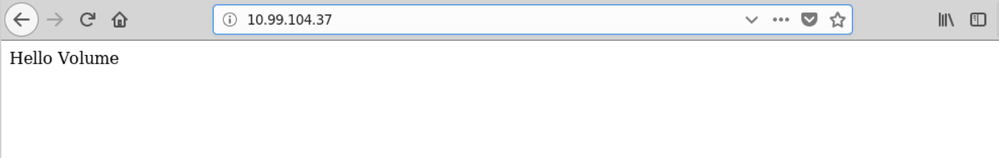

From here we can refresh the webpage and see that the changes have been made:

Cool. Now let's delete the entire configuration:

kubectl delete -f ng1-hostPath.yaml

After it is gone, we can create the resources from the YAML file again and see that the data was preserved:

kubectl create -f ng1-hostPath.yaml

Refreshing the browser gives us the same cheery, "Hello Volume" message.

We can, in fact, go to the host node we specified and modify the contents of /ng1 directly. We could use a configuration management tool like Chef to keep these /ng1 directories in sync on each of the worker nodes. That way all nodes could serve the same stuff.

The problem with those approaches is that we either introduce complexity or points of failure. Lucky for us, Kubernetes provides another way.

Persistent Volumes

As you may have noticed in the preceding configuration, volumes are tied directly to the configuration of the pod. Persistent volumes differ from plain volumes in that they are defined as an entity of their own. Plain volumes need to be pre-deployed by an administrator before the user can employ them. Persistent volumes, on the other hand, can either be pre-deployed (like the volumes of yore) or dynamically allocated. Whether a persistent volume can be dynamically created depends on the PV plugin. Both volumes and persistent volumes have the idea of plugins. We used the hostPath plugin previously. We can now use the HyperFlex FlexVolume plugin to create a persistent volume to be used by our application.
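
As a rough sketch of what "an entity of their own" means in practice, the storage request can live in a separate PersistentVolumeClaim object that the pod only references by name. The names `ng1-data` and `ng1-pvc` and the 1Gi size are assumptions for illustration. Because no storage class is named, the claim would be served by whatever default storage class the cluster has.

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: ng1-data              # hypothetical claim name
spec:
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi            # illustrative size
---
apiVersion: v1
kind: Pod
metadata:
  name: ng1-pvc               # hypothetical pod name
spec:
  containers:
  - name: nginx
    image: nginx
    volumeMounts:
    - name: ngx
      mountPath: /usr/share/nginx/html/
  volumes:
  - name: ngx
    persistentVolumeClaim:
      claimName: ng1-data     # the pod refers to the claim, not to the storage itself
```

Deleting the pod leaves the claim and its data in place, so a replacement pod can mount the same claim.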

Storage Classes

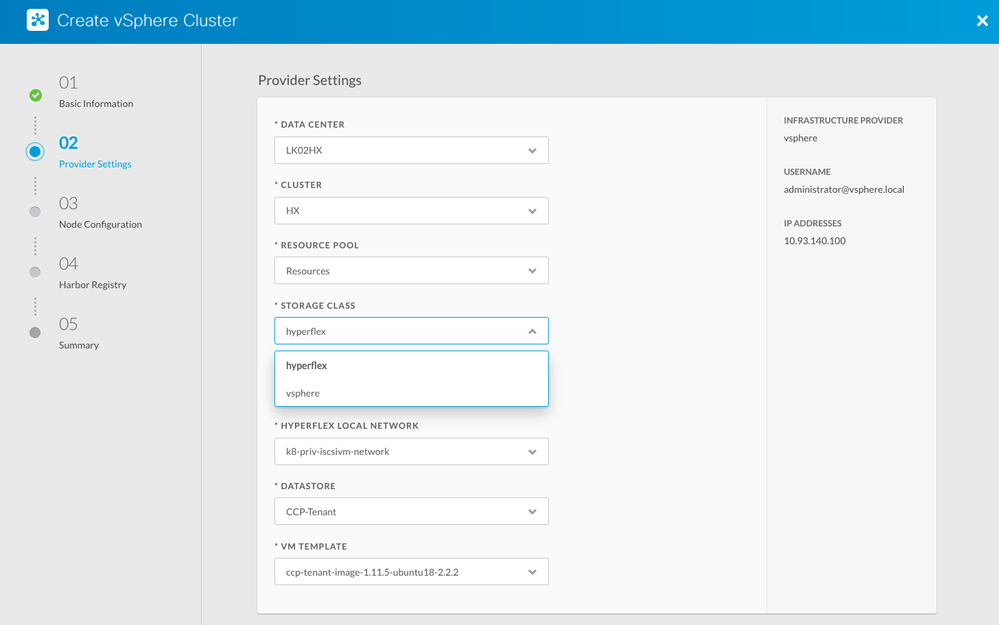

Kubernetes has the concept of a StorageClass. StorageClasses come with a provisioner, which is abstracted away from the user. The provisioner is platform dependent, and the Kubernetes documentation lists the available provisioners. Think of a storage class as a storage plugin that automatically provisions storage for users who want it. An administrator can create a default storage class so that persistent volumes are provisioned automatically when they are requested. In Cisco Container Platform, this happens in step 2, where we select which storage class we want. If you choose hyperflex, Kubernetes will use the FlexVolume plugin to communicate with HyperFlex and carve out storage.
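
For reference, a default storage class is an ordinary Kubernetes object that carries a well-known annotation. The sketch below shows the general shape only: the class name and the provisioner string are placeholders, not the values CCP or HyperFlex actually install, so check your own cluster for the real ones.

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: example-default                                # placeholder name
  annotations:
    # marks this class as the one used when a claim names no storage class
    storageclass.kubernetes.io/is-default-class: "true"
provisioner: example.com/placeholder-provisioner       # placeholder, not the HyperFlex provisioner
reclaimPolicy: Delete                                  # delete the backing volume when the claim is deleted
```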

Using HX Persistent Volumes

The HX Kubernetes guide has lots of info on how the HX persistent volume plugin works. After you install CCP and get a tenant cluster up and running with HyperFlex as the default storage class, you can use it to create persistent volumes for a MariaDB server.

Let's create a YAML file for MariaDB called mariadb-StatefulSet.yaml, based on another YAML file found on GitHub.

apiVersion: v1
kind: Service
metadata:
  name: mariadb
  labels:
    app: mariadb
spec:
  ports:
  - port: 3306
    name: mysql
  selector:
    app: mariadb
    tier: mysql-cache
  clusterIP:
  type: LoadBalancer
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  creationTimestamp: null
  labels:
    app: mariadb
    tier: mysql-cache
  name: mariadb
spec:
  selector:
    matchLabels:
      app: mariadb
      tier: mysql-cache
  serviceName: mariadb
  template:
    metadata:
      creationTimestamp: null
      labels:
        app: mariadb
        tier: mysql-cache
    spec:
      containers:
      - env:
        - name: MYSQL_ROOT_PASSWORD
          value: f00bar123
          #valueFrom:
          #  secretKeyRef:
          #    key: mariadb-pass-root.txt
          #    name: mariadb-pass-root
        image: mariadb:10.2.12
        imagePullPolicy: IfNotPresent
        name: mariadb
        ports:
        - containerPort: 3306
          name: mysql
          protocol: TCP
        volumeMounts:
        - mountPath: /var/lib/mysql
          name: mariadb-persistent-storage
      restartPolicy: Always
  updateStrategy:
    type: RollingUpdate
  volumeClaimTemplates:
  - metadata:
      creationTimestamp: null
      labels:
        app: mariadb
      name: mariadb-persistent-storage
    spec:
      accessModes:
      - ReadWriteOnce
      resources:
        requests:
          storage: 10Gi
      selector:
        matchLabels:
          app: mariadb
      storageClassName: hyperflex
status:
  replicas: 0

In this example we use storageClassName: hyperflex in a persistent volume claim template. A StatefulSet uses the template to create a separate persistent volume claim for each replica, so with multiple replicas each pod would get its own volume. While you should probably store the MariaDB password in a Secret, we can test by setting it statically.

Now we can use the MariaDB client utilities to log in and create a database. First, get the IP address of the database by running:
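
If you would rather not hard-code the password, a Secret along the following lines should work. This is a sketch: the name and key simply mirror the commented-out secretKeyRef lines in the StatefulSet, and the password is the same test value used in this post.

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: mariadb-pass-root              # matches the commented-out secretKeyRef name
type: Opaque
stringData:
  # stringData accepts plain text; the API server stores it base64-encoded
  mariadb-pass-root.txt: f00bar123     # matches the commented-out secretKeyRef key
```

With the Secret created, the commented-out valueFrom block in the StatefulSet can replace the literal value line for MYSQL_ROOT_PASSWORD.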

kubectl get svc
NAME         TYPE           CLUSTER-IP      EXTERNAL-IP     PORT(S)          AGE
kubernetes   ClusterIP      10.96.0.1       <none>          443/TCP          1h
mariadb      LoadBalancer   10.109.13.181   10.93.140.132   3306:31607/TCP   17m
ngx1         LoadBalancer   10.108.108.5    10.93.140.130   80:30123/TCP     18m

Here we see that 10.93.140.132 is the external IP (LoadBalancer). Normally we would not expose the MariaDB outside of the cluster, but for this experiment we can do so.

mysql -u root -h 10.93.140.132 -pf00bar123
MariaDB [(none)]> show databases;
+---------------------+
| Database            |
+---------------------+
| #mysql50#lost+found |
| information_schema  |
| mysql               |
| performance_schema  |
+---------------------+
MariaDB [(none)]> create database test1
    -> ;
Query OK, 1 row affected (0.069 sec)
MariaDB [(none)]> quit;
Bye

Now we can delete the pod and verify that the storage remains:

kubectl delete pod mariadb-0

The StatefulSet controller recreates the pod right away and reattaches the same persistent volume claim. By logging into MariaDB again we can see that the test1 database remains:

mysql -u root -h 10.93.140.132 -pf00bar123
Welcome to the MariaDB monitor. Commands end with ; or \g.
Your MariaDB connection id is 8
Server version: 10.2.12-MariaDB-10.2.12+maria~jessie mariadb.org binary distribution

Copyright (c) 2000, 2018, Oracle, MariaDB Corporation Ab and others.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

MariaDB [(none)]> show databases;
+---------------------+
| Database            |
+---------------------+
| #mysql50#lost+found |
| information_schema  |
| mysql               |
| performance_schema  |
| test1               |
+---------------------+
5 rows in set (0.070 sec)

MariaDB [(none)]>
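
The data survives because the claim generated from the volume claim template is a separate object that is not deleted with the pod. As a sketch, the claim for the first replica would look roughly like the manifest below. StatefulSets name these claims after the template and the pod, and the other fields are copied from the template in this post. The live object would also carry fields filled in by the cluster, which are omitted here.

```yaml
# Approximate shape of the claim generated for pod mariadb-0 (sketch only;
# cluster-populated fields such as uid, volumeName, and status are omitted)
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: mariadb-persistent-storage-mariadb-0   # <template name>-<pod name>
  labels:
    app: mariadb
spec:
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 10Gi
  selector:
    matchLabels:
      app: mariadb
  storageClassName: hyperflex
```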

Stateful sets, persistent volumes, very simple with Hyperflex and CCP!
